The ISO 10012 standard on measurement management systems is about to be updated for the first time since 2003, with the new edition expected in early 2026. This update is more than just a technical refresh; it is widely viewed as an important milestone for industry. The revision is expected to increase the visibility and importance of measurement management systems. Many organizations, including suppliers in regulated or quality-critical sectors, may increasingly be required to demonstrate compliance with, or even formal certification against, ISO 10012 in the future.

The revision brings ISO 10012 in line with today’s management system structure and introduces a stronger, risk-based approach. It also adds valuable practical guidance on topics such as calibration interval optimization, measurement uncertainty, and decision rules. At the same time, the revision highlights the strategic role of measurement in ensuring confidence, compliance, and operational performance – not just in laboratories, but across entire organizations.

For calibration professionals, this means clearer requirements, improved alignment with standards like ISO 9001 and ISO 14001, and stronger tools to manage measurement-related risks in everyday work. The expected rise in certification activity may also elevate the status of measurement management as a core component of organizational quality systems.

Beamex has actively contributed to the development of this new edition, helping ensure that the practical needs of calibration professionals are represented.

In this article, we’ll explain what ISO 10012 is, why the update was needed, what’s new compared to the 2003 version, and – most importantly – what the changes mean in practice for people managing and performing calibrations.

This article also includes insights from one of the standard’s contributors, Alistair Norwood, along with a link to his detailed explanatory white paper.

Table of contents

- What is ISO 10012?

- Why a new edition now?

- A quick tour of the new structure

- What’s new and different in the 2026 edition

- What stays familiar

- Practical implications for different roles

- How ISO 10012 relates to ISO 9001, ISO 14001, and ISO/IEC 17025

- Key technical topics spotlight

1. Calibration interval optimization

2. Measurement uncertainty

3. Decision rules and measurement decision risk

- Migration checklist: moving from ISO 10012:2003 to ISO 10012:2026

- Beamex’s involvement in the update

- Expert insight: why the new ISO 10012 matters

- Download the related white paper

- How Beamex can help

- Frequently Asked Questions

- Conclusion

Looking for a deeper explanation of the new ISO 10012? Jump to the expert-written white paper section later in this article.

What is ISO 10012? (and a short version history)

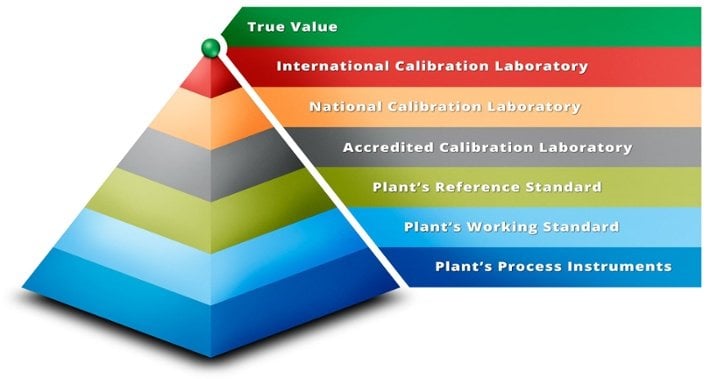

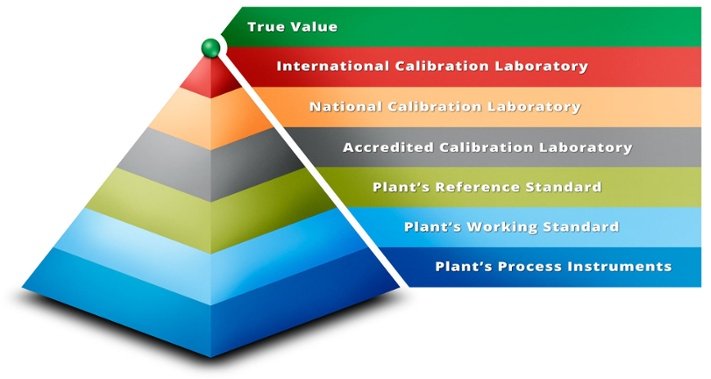

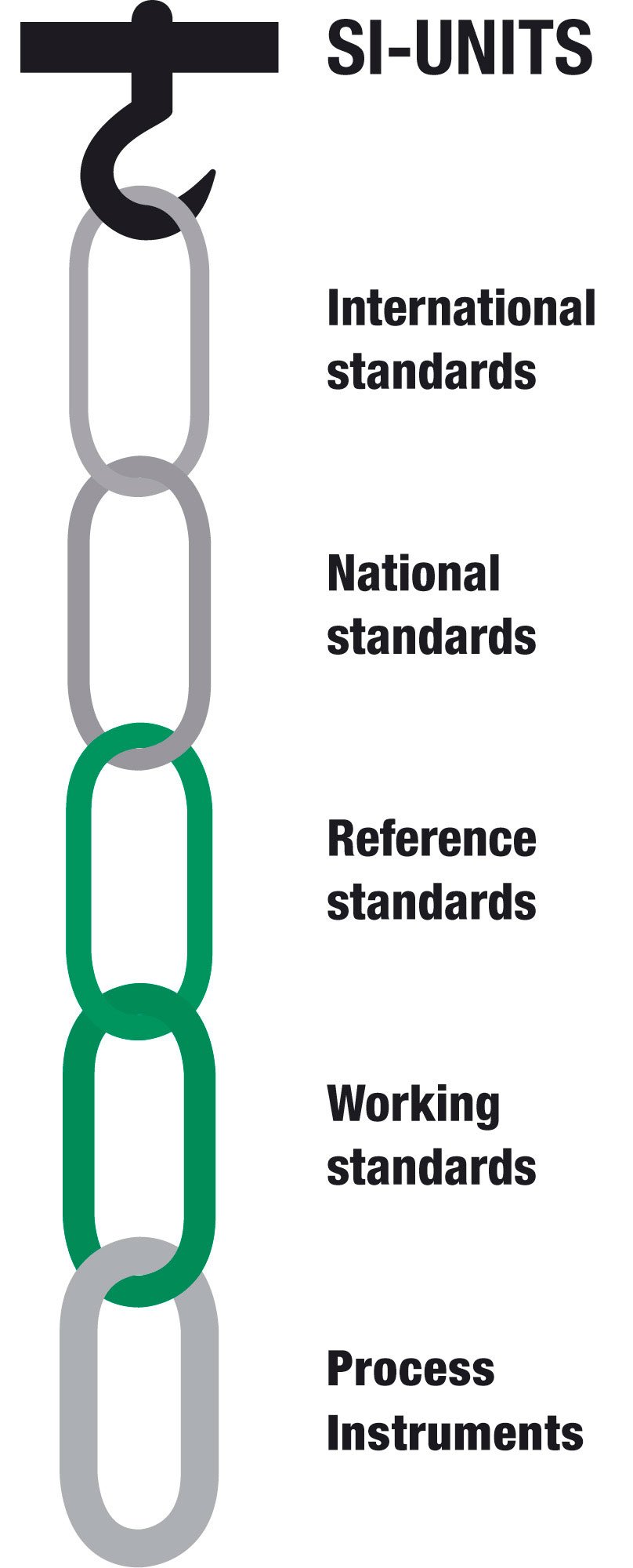

ISO 10012, “Measurement management systems – Requirements for measurement processes and measuring equipment,” is the international standard for managing measurement activities. Its purpose is to ensure that measurements made within an organization are reliable, traceable, and suitable for their intended use. In practice, it provides a structured approach to managing measuring equipment, calibration, and measurement processes.

Version history:

- 1992: ISO 10012-1 introduced, focused on requirements for measuring equipment.

- 1997: ISO 10012-2 published, covering measurement processes.

- 2003: ISO 10012-1 and ISO 10012-2 consolidated into ISO 10012:2003, the version still in use today.

- 2026: A new edition modernizes the standard in line with current management system approaches and adds new practical guidance.

Why a new edition now?

Since 2003, industry and management system standards have both evolved significantly. ISO 9001 (Quality Management Systems) and ISO 14001 (Environmental Management Systems) have moved to a high-level structure (Annex SL) and emphasized risk-based thinking. Technology, digitalization, and data-driven calibration methods have also changed the way organizations manage measurement processes.

Updating ISO 10012 makes it:

- consistent with modern management system structures

- more practical for organizations that must demonstrate control of their measurement processes, and

- a potential basis for third-party certification, which was not emphasized in 2003.

A quick tour of the new structure

The 2003 edition had the following main sections:

- Management responsibility

- Resource management

- Metrological confirmation and realization of processes

- Analysis and improvement

The 2026 draft follows the modern Annex SL structure, with clauses 4–10:

- Context of the organization

- Leadership

- Planning

- Support

- Operation

- Performance evaluation

- Improvement

What’s new and different in the 2026 edition

- Risk-based approach: Risk is explicitly integrated into planning and operations. Organizations must identify and manage risks linked to incorrect measurement results.

- Context and leadership: New clauses emphasize understanding stakeholders and top management accountability.

- Design and development: Clearer expectations for how organizations design and validate measurement processes.

- Stronger link to other management systems: Easier integration with ISO 9001, ISO 14001, and others.

- Annexes with practical guidance:

  - Calibration interval optimization

  - Measurement uncertainty

  - Decision rules and measurement decision risk

  - Relationship to ISO/IEC 17025

- Certification possibility: The new edition can serve as a basis for third-party certification of a measurement management system.

What stays familiar

Despite the new structure, the essence of ISO 10012 remains:

- Ensure measurement results are valid and traceable.

- Keep measuring equipment under control through calibration and verification.

- Maintain competence of personnel.

- Control the environment where measurements take place.

- Manage data, records, and software used in measurements.

Practical implications for different roles

For quality and operations leaders:

- Ensure MMS objectives align with business goals.

- Include MMS performance in management reviews.

For metrology managers:

- Use new guidance on interval optimization and uncertainty to refine calibration programs.

- Define clear decision rules for pass/fail criteria.

For calibration technicians and engineers:

- Be aware of competence requirements and training needs.

- Apply improved practices for labeling, identification, and traceability of equipment.

For procurement/supplier quality:

- Evaluate and control externally provided calibration services.

How ISO 10012 relates to ISO 9001, ISO 14001, and ISO/IEC 17025

ISO 10012 is not a replacement for ISO 9001 or ISO 14001, but it supports them by ensuring reliable measurements that underpin quality and environmental performance.

- With ISO 9001 (Quality Management Systems): ISO 10012 helps ensure product conformity through valid measurements.

- With ISO 14001 (Environmental Management Systems): ISO 10012 ensures environmental data and monitoring are trustworthy.

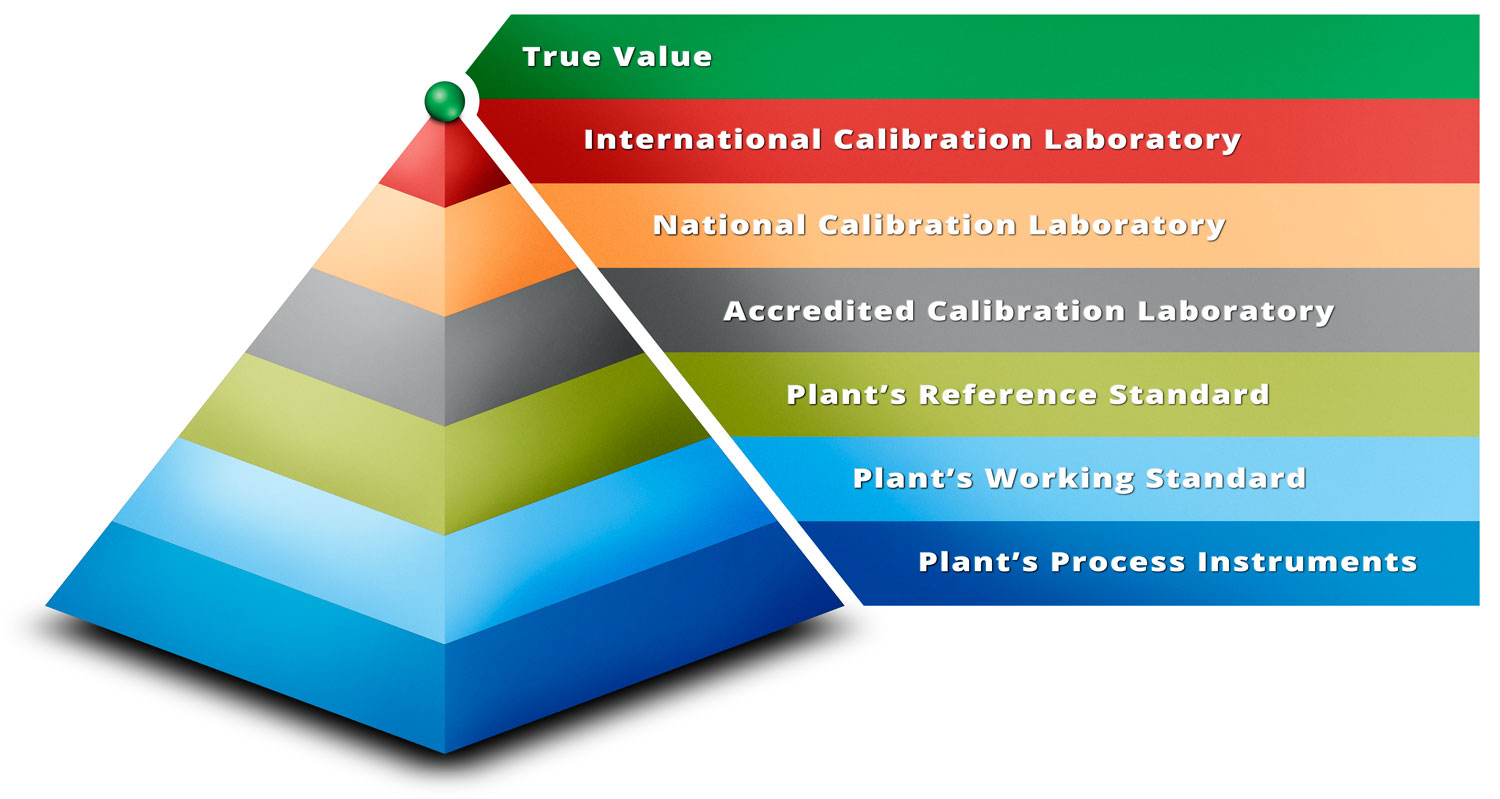

- With ISO/IEC 17025 (General requirements for the competence of testing and calibration laboratories): ISO 10012 complements lab competence requirements by covering organization-wide measurement management.

Key technical topics spotlight

1. Calibration interval optimization

The new edition of ISO 10012 adds valuable guidance on how to determine the most appropriate calibration intervals for measuring equipment. Instead of using fixed intervals (for example, every 12 months), organizations are encouraged to use a more analytical, data-driven approach. Several methods are highlighted:

- Fixed interval method: The simplest method, where equipment is calibrated at regular, predefined intervals regardless of performance history. Easy to apply but may lead to over- or under-calibration.

- Drift analysis: Calibration intervals are adjusted based on observed drift in measurement results over time. If equipment shows stable performance, the interval may be extended.

- Periodicity ratio method: Uses historical data to compare how often instruments remain in tolerance versus out of tolerance and adjusts intervals accordingly.

- OPPERET method (from the French “Optimisation des Périodicités d’Étalonnage,” i.e. optimization of calibration intervals): A method used to calculate calibration intervals by balancing the cost of calibration with the risk of measurement errors.

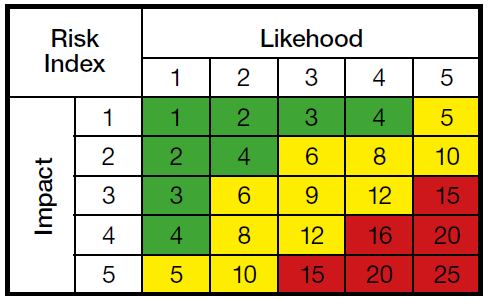

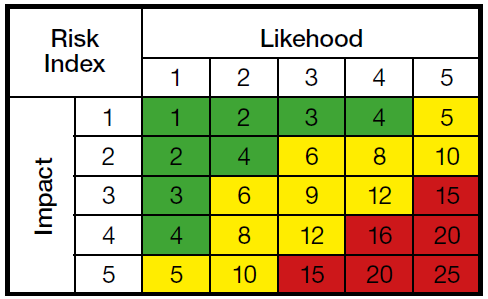

- Risk-based method: Considers the potential impact of incorrect measurement results on product quality, safety, compliance, or business performance. High-risk instruments may need shorter intervals, while low-risk instruments can have longer ones.

Practical impact: By moving away from a one-size-fits-all approach, organizations can reduce the number of unnecessary calibrations and save costs while maintaining confidence in measurement results. This makes calibration programs more efficient and defensible during audits.
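To make the drift-based idea more concrete, here is a minimal sketch in Python. It is not taken from ISO 10012; the rule, the function name `suggest_interval_days`, the `CalibrationRecord` type, and all default values are our own assumptions for illustration. It assumes the instrument is adjusted back to zero error at each calibration, so the as-found error at the next calibration approximates the drift over that interval.

```python
from dataclasses import dataclass


@dataclass
class CalibrationRecord:
    """One past calibration (hypothetical structure for this example)."""
    days_since_previous: float  # days elapsed since the previous calibration
    as_found_error: float       # as-found error, same unit as the tolerance


def suggest_interval_days(history, tolerance, current_interval_days,
                          drift_fraction=0.5, min_days=30, max_days=730):
    """Suggest a calibration interval from observed drift.

    Illustrative rule (an assumption, not a requirement of any standard):
    choose the interval at which the worst observed drift rate would
    consume `drift_fraction` of the tolerance, clamped to sensible bounds.
    """
    # Absolute drift rate per day for each usable record.
    rates = [abs(r.as_found_error) / r.days_since_previous
             for r in history if r.days_since_previous > 0]
    if not rates:
        return current_interval_days  # no evidence: keep the current interval
    worst_rate = max(rates)
    if worst_rate == 0:
        return max_days  # no measurable drift: extend to the allowed maximum
    target_days = drift_fraction * tolerance / worst_rate
    return int(max(min_days, min(max_days, target_days)))


if __name__ == "__main__":
    history = [CalibrationRecord(365, 0.10), CalibrationRecord(365, 0.40)]
    # Tolerance of 0.5 units; the second record used most of it within a year.
    print(suggest_interval_days(history, tolerance=0.5, current_interval_days=365))
```

In this example the worst record drifted 0.40 units in a year against a tolerance of 0.5, so the sketch suggests shortening the interval. A real program would also weigh the criticality of the measurement, as the risk-based method describes.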

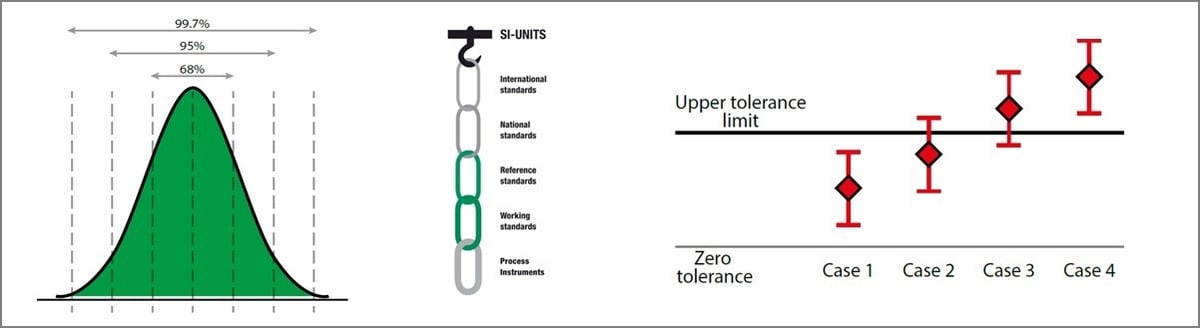

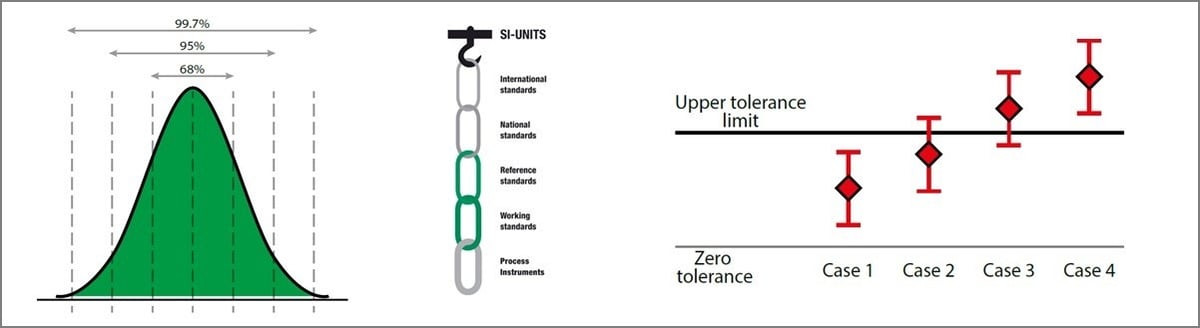

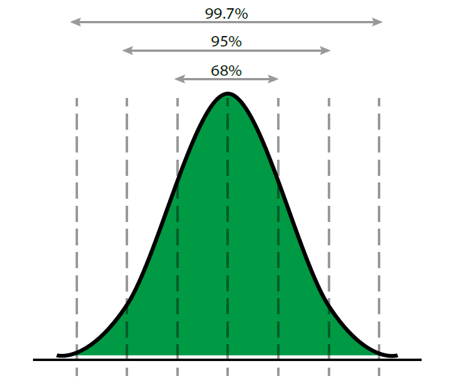

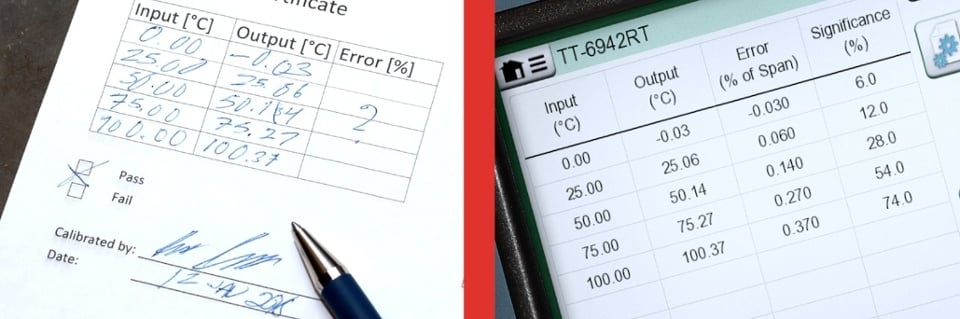

2. Measurement uncertainty

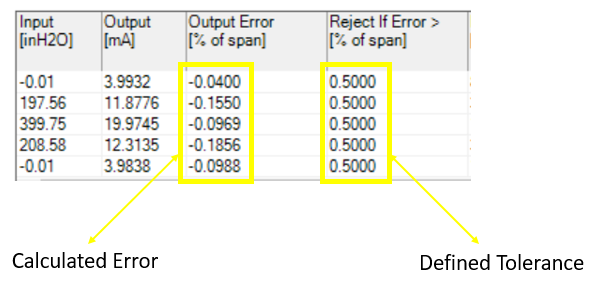

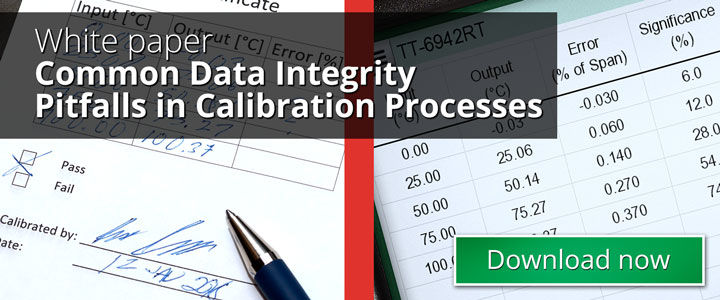

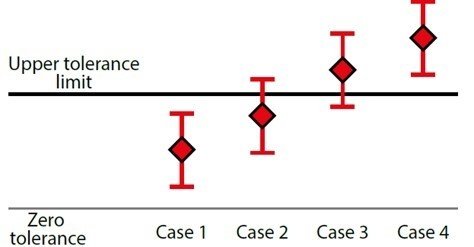

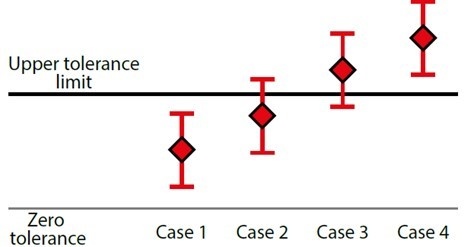

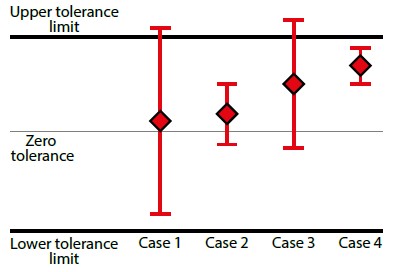

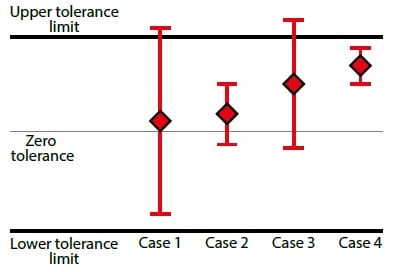

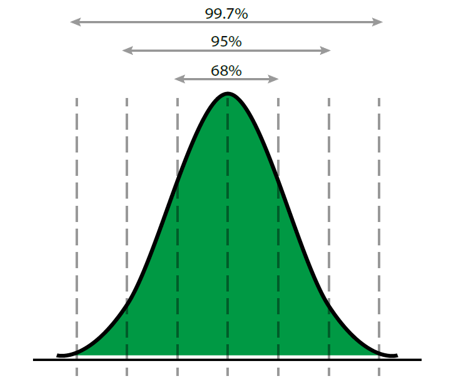

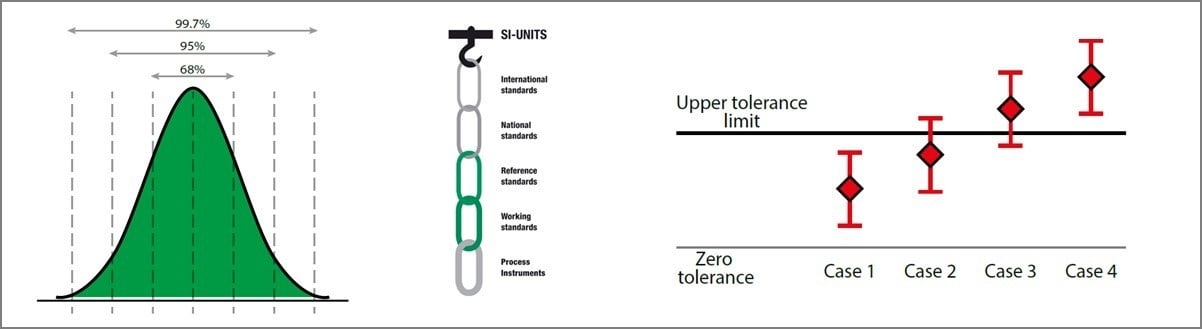

The standard offers concise guidance on how to calculate and apply measurement uncertainty in decision-making. Importantly, it emphasizes that pass/fail (conformity) decisions should not be made solely by comparing the measured value to the tolerance. Instead, the measurement uncertainty must be taken into account. This ensures that organizations properly manage the risk of false acceptance (declaring something in tolerance when it is not) and false rejection (declaring something out of tolerance when it is in fact acceptable).

One practical method is guard banding, where the acceptance limits are tightened by an amount related to the measurement uncertainty. This reduces the chance of false acceptance by ensuring only results that are clearly within tolerance are accepted.

Practical impact: Organizations can make better and more defensible decisions when results are borderline, avoiding both false confidence and unnecessary rejections.
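As a rough illustration of guard banding, the sketch below tightens each tolerance limit by a multiple of the expanded uncertainty and classifies a result. The function name `conformity_decision`, the three outcome labels, and the default guard factor of 1.0 are assumptions made for this example; how results that fall inside the guard band are handled depends on the decision rule your organization has documented.

```python
def conformity_decision(measured, lower_limit, upper_limit,
                        expanded_uncertainty, guard_factor=1.0):
    """Classify a result against guard-banded acceptance limits.

    The guard band width is guard_factor * expanded_uncertainty
    (an illustrative choice, not a prescribed value).
    Returns "accept", "guard_band", or "reject".
    """
    guard_band = guard_factor * expanded_uncertainty
    accept_low = lower_limit + guard_band
    accept_high = upper_limit - guard_band
    if accept_low > accept_high:
        # Uncertainty is too large for any result to be accepted with this rule.
        raise ValueError("guard band is wider than half the tolerance interval")
    if accept_low <= measured <= accept_high:
        return "accept"      # clearly within tolerance
    if lower_limit <= measured <= upper_limit:
        return "guard_band"  # within tolerance, but too close to a limit
    return "reject"          # outside the tolerance limits


if __name__ == "__main__":
    # Tolerance 9.9 to 10.1, expanded uncertainty 0.03.
    for value in (10.02, 10.08, 10.12):
        print(value, conformity_decision(value, 9.9, 10.1, 0.03))
```

With these numbers the acceptance zone shrinks to roughly 9.93 to 10.07, so a reading of 10.08 is inside the tolerance but is not accepted outright.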

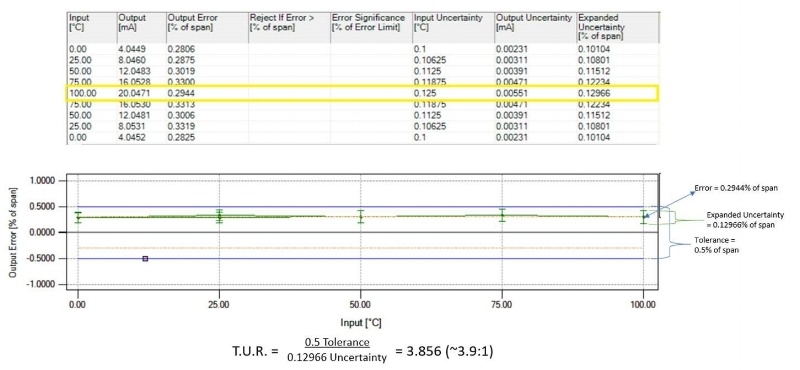

3. Decision rules and measurement decision risk

Annex D provides detailed guidance on how to establish decision rules and manage risks such as false accept and false reject. Organizations are expected to define and document the decision rule they use, and the rule should clearly state how uncertainty is factored into conformity assessment.

The annex also emphasizes the use of the Test Uncertainty Ratio (TUR). TUR is the ratio of the tolerance of the item being calibrated to the expanded uncertainty of the calibration process. In practice, a higher TUR means greater confidence that a measurement result close to the tolerance limit is still reliable.
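The TUR calculation itself is simple arithmetic. The sketch below assumes a two-sided tolerance and an expanded uncertainty expressed in the same unit, and uses the common convention of dividing the tolerance span by twice the expanded uncertainty; the function name `tur` is our own. The 4:1 figure in the comment is a widely used industry rule of thumb, not something we attribute to ISO 10012.

```python
def tur(lower_limit, upper_limit, expanded_uncertainty):
    """Test Uncertainty Ratio for a two-sided tolerance.

    Uses the common convention: tolerance span / (2 * expanded uncertainty).
    For a symmetric tolerance of +/-T this reduces to T / U.
    """
    if expanded_uncertainty <= 0:
        raise ValueError("expanded uncertainty must be positive")
    return (upper_limit - lower_limit) / (2 * expanded_uncertainty)


if __name__ == "__main__":
    # Tolerance of +/-0.5 units, expanded uncertainty of 0.125 units.
    ratio = tur(-0.5, 0.5, 0.125)
    print(f"TUR = {ratio:.1f}:1")
    # Many organizations use 4:1 as a rule-of-thumb target (an assumption here).
    print("meets 4:1 target" if ratio >= 4 else "below 4:1 target")
```

When the ratio falls below the target you have set, guard banding or a better reference standard are the usual ways to keep the risk of false acceptance under control.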

Migration checklist: moving from ISO 10012:2003 to ISO 10012:2026

- Perform a gap assessment against clauses 4–10

- Update documentation

- Review risks related to measurement processes

- Refresh training and competence assessments

- Review calibration intervals and decision rules

- Evaluate external calibration suppliers

- Plan transition timeline (allow time for audits, reviews, and system updates)

Beamex’s involvement in the update

Beamex has been part of the ISO working group revising the standard, through the contributions of Christophe Boubay and Gautier Triboulloy, both of whom work at Beamex France.

“The revision of ISO 10012 brings the standard in line with today’s management system practices and risk-based thinking. The aim has been to make it clearer and more practical for organizations that rely on accurate measurements in their operations. It brings metrology and calibration to the status they deserve, as a strong part of every industry’s quality system, supporting a safer and less uncertain world.”

“By adding guidance on topics like calibration interval optimization, uncertainty, and decision rules, the new edition gives calibration professionals stronger tools for managing risk and ensuring confidence in results.”

Christophe Boubay, Regional Sales Director, Beamex

Expert insight: why the new ISO 10012 matters

To help explain why the updated ISO 10012 is such a significant development, we asked Alistair Norwood, a long-standing contributor to the standard, to share his perspective.

Alistair chairs two BSI committees, QS/1/3 (Quality management – Supporting technologies) and SS/6 (Statistical procedures for measurement methods and results), and is a contributor to ISO/TC 176/SC 3/WG 27, the working group responsible for the revision of ISO 10012. He works as a Subject Matter Expert in Metrology for Sellafield in Cumbria. Alistair is also a long-term user of the Beamex calibration ecosystem.

“The new measurement standard ISO 10012 doesn’t prescribe the procedures behind how an organisation makes a measurement; it goes much further and defines the thinking and the company structure that must go into making a measurement. A washing machine manufacturer and a military valve supplier will reach different answers, but both must follow the same disciplined approach to risk and measurement integrity.”

“The standard is one of the biggest shifts in how a company carries out measurement. In the updated ISO 10012, measurement is no longer seen as only a laboratory activity. Measurement becomes a company-wide exercise, involving the whole company from management and design to procurement to production. Each area within an organization has a role in ensuring measurement integrity.”

Alistair Norwood, Contributor to ISO/TC 176/SC 3/WG 27 Revision of ISO 10012 and Metrology Subject Matter Expert, Sellafield

Get a deeper view of ISO 10012

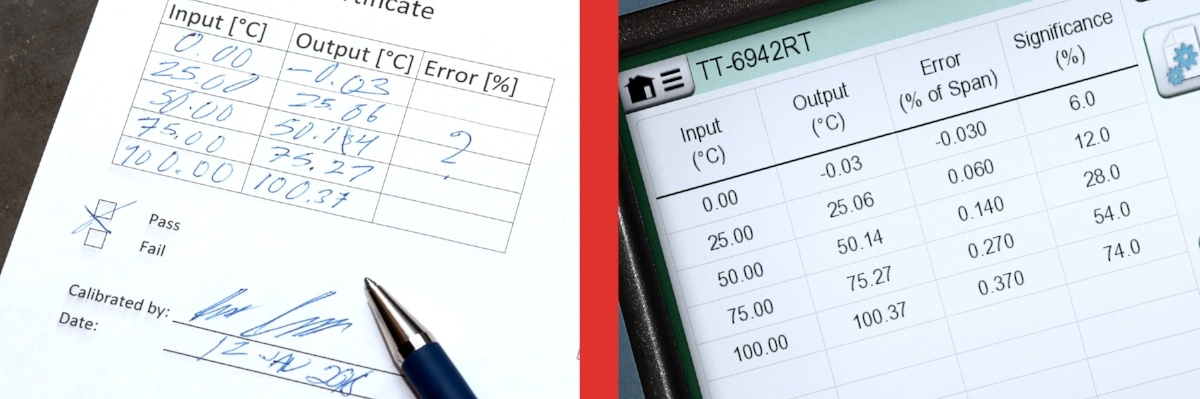

If you want a deeper understanding of the thinking behind the updated ISO 10012 edition, Alistair has prepared a comprehensive white paper that explains the standard in clear, practical language. This free, downloadable resource is available to anyone who wants a more in-depth view of the intent, structure and real-world application of the updated standard.

How Beamex can help

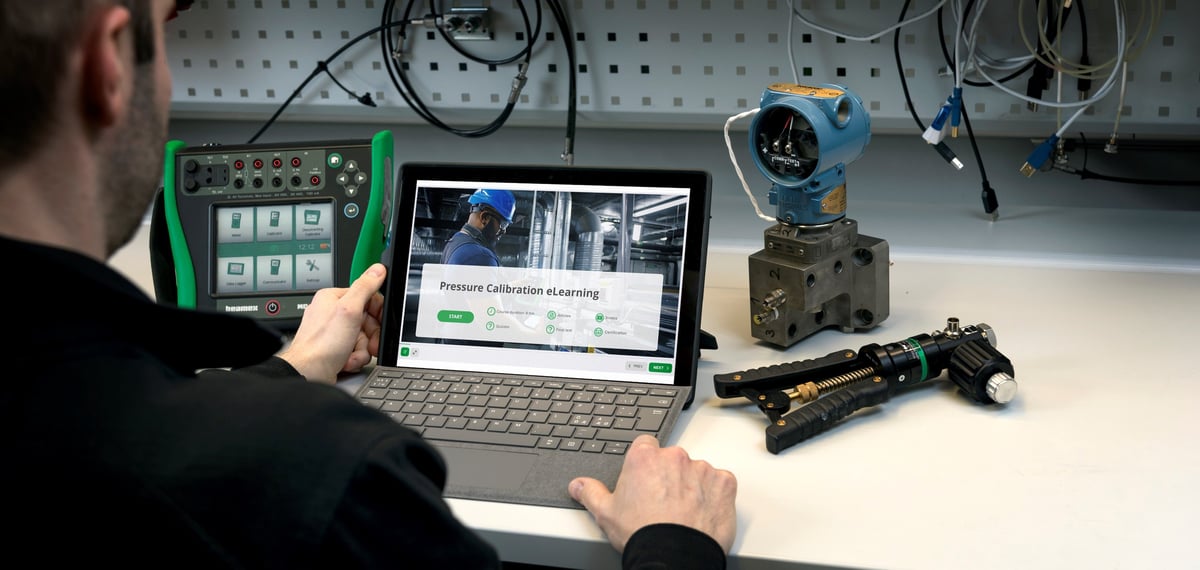

At Beamex, we provide a calibration ecosystem that helps organizations meet the requirements of ISO 10012 and beyond. For example:

- Calibration management software (LOGiCAL and CMX) supports requirements for documented information, traceability, analysis of measurement data, and management reviews.

- Portable and workshop calibrators provide reliable, traceable calibration of measuring equipment, fulfilling the requirement for control of measurement processes.

- Connectivity with CMMS/ERP systems ensures integration of calibration into wider business processes, aligning with the emphasis on context, leadership, and planning in ISO 10012.

- Services, training, and support help organizations maintain competence, which is a key requirement of the standard.

For readers who want to dive deeper into software selection, please see our free resource:

A Buyer's Guide to Calibration Management Software

Contact Beamex experts

If you need support in implementing the updated ISO 10012 standard, or want to talk through any calibration-related challenges, our experts are here to help. Get in touch with us to start the conversation.

Frequently asked questions

Do I need ISO/IEC 17025 if I follow ISO 10012?

Not necessarily. ISO/IEC 17025 is for testing/calibration laboratories, while ISO 10012 covers an organization-wide measurement management system.

Will certification become mandatory?

The standard itself doesn’t mandate certification, but the new edition makes third-party certification possible. Whether it becomes expected depends on industry requirements.

How do I set decision rules in practice?

You should define decision rules based on customer requirements, regulatory expectations, and acceptable risk levels. The annex provides guidance for doing this.

What data do I need for interval optimization?

You will need historical calibration data, drift analysis, and data on equipment usage patterns.

Conclusion

The new edition of ISO 10012 marks a significant step forward for calibration and measurement professionals. It makes the standard more relevant, more practical, and better aligned with modern management systems.

For organizations, the update is both a challenge and an opportunity: a challenge in terms of updating systems and processes, and an opportunity to strengthen confidence in measurements, improve efficiency, and demonstrate competence.

Beamex is here to support your journey with expertise, tools, and services built around real calibration challenges.

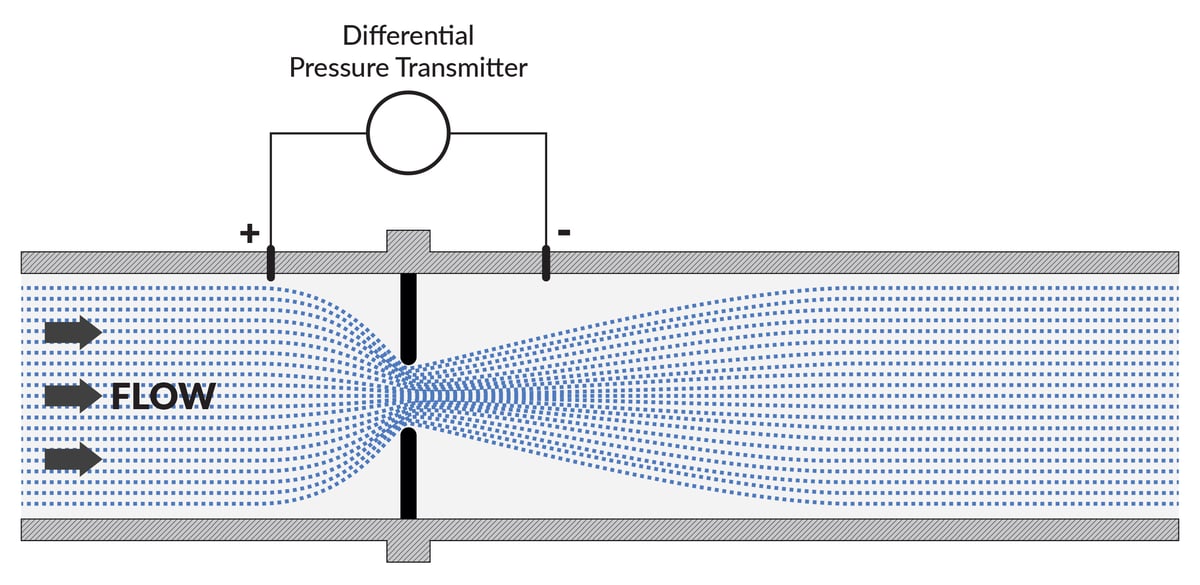

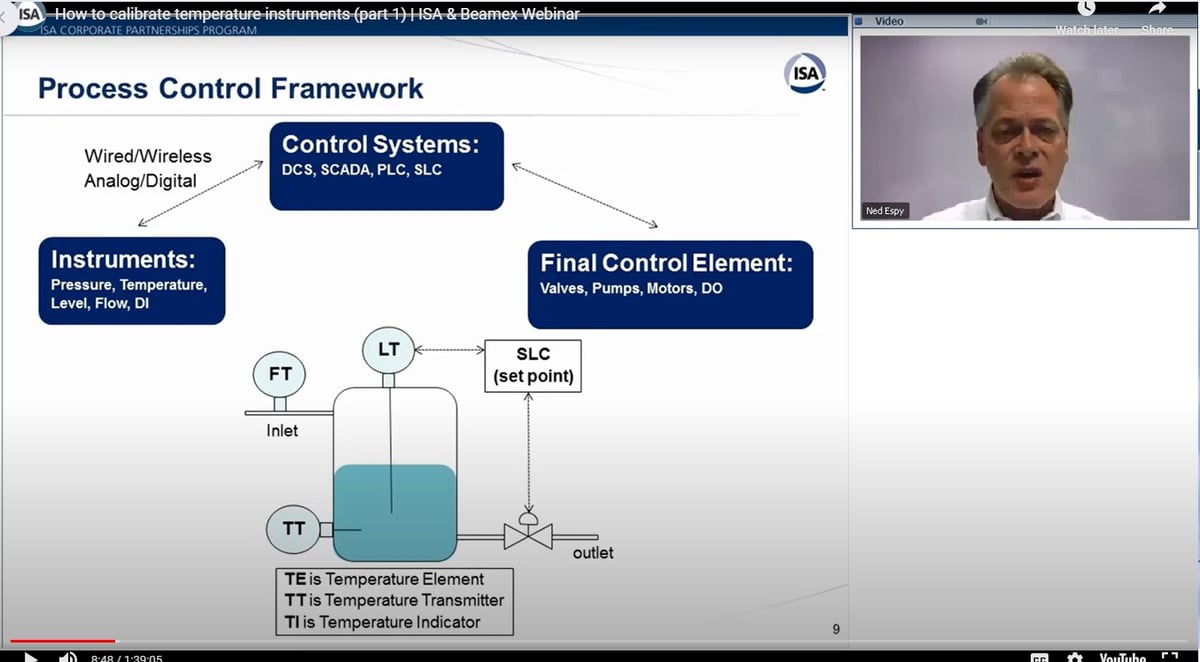

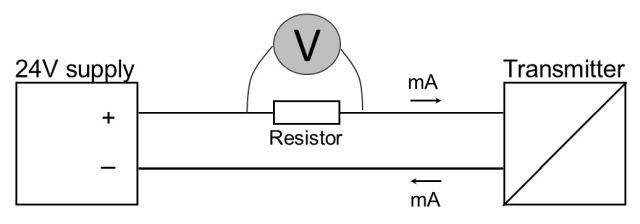

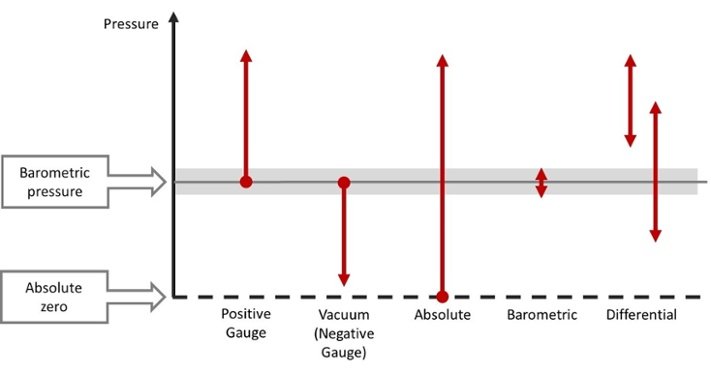

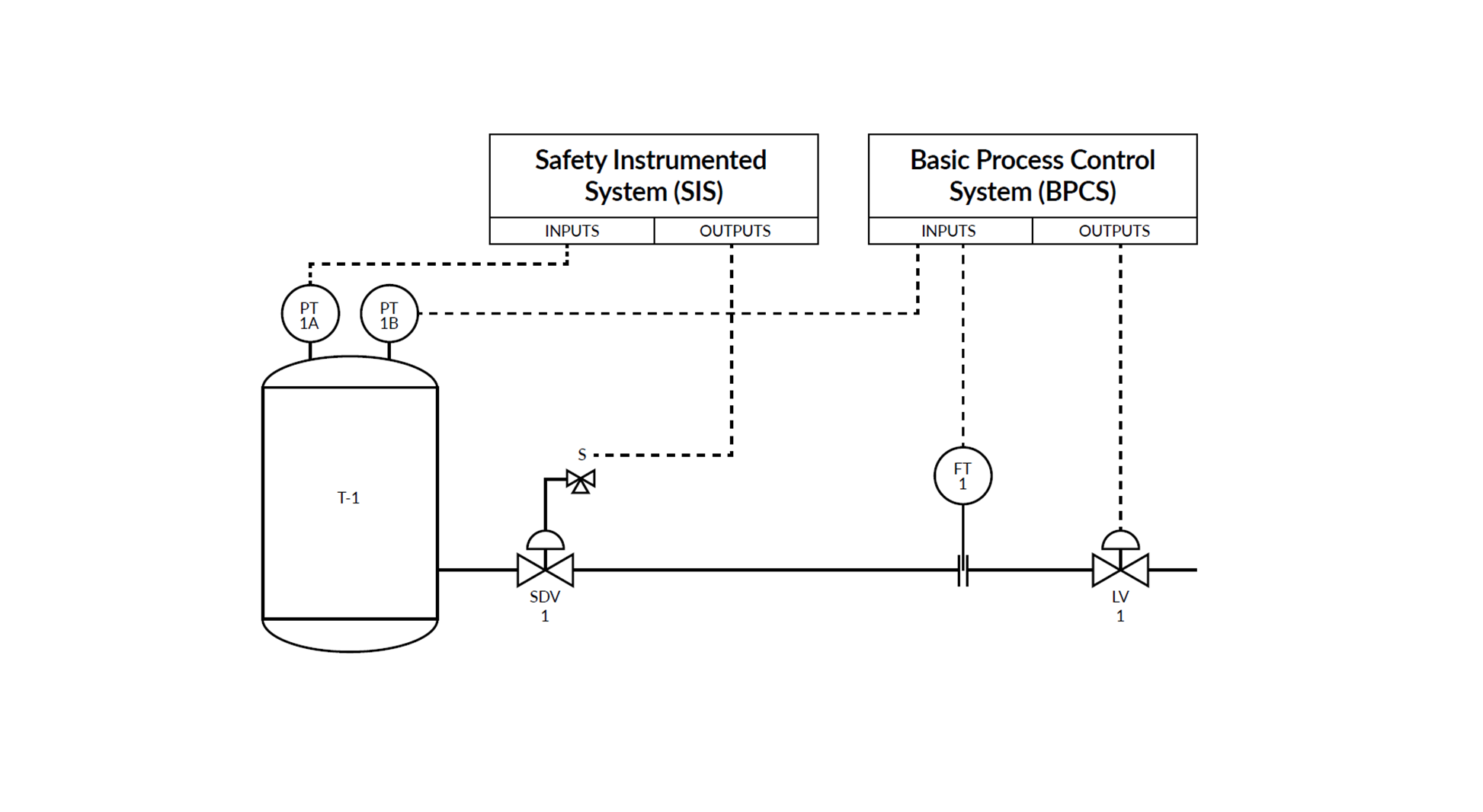

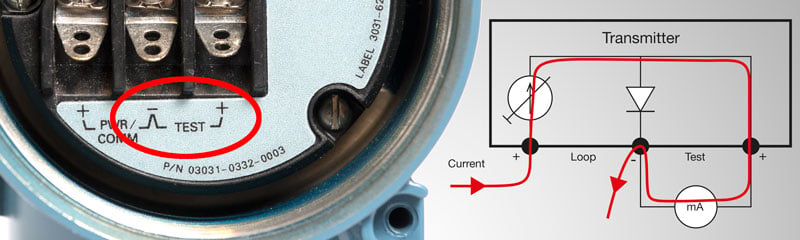

Differential pressure transmitters are widely used in process industries to measure pressure, flow, and level. In flow measurement, differential pressure (DP) transmitters are used to measure the pressure difference across a restriction, such as an orifice plate or Venturi tube.

Differential pressure transmitters are widely used in process industries to measure pressure, flow, and level. In flow measurement, differential pressure (DP) transmitters are used to measure the pressure difference across a restriction, such as an orifice plate or Venturi tube.

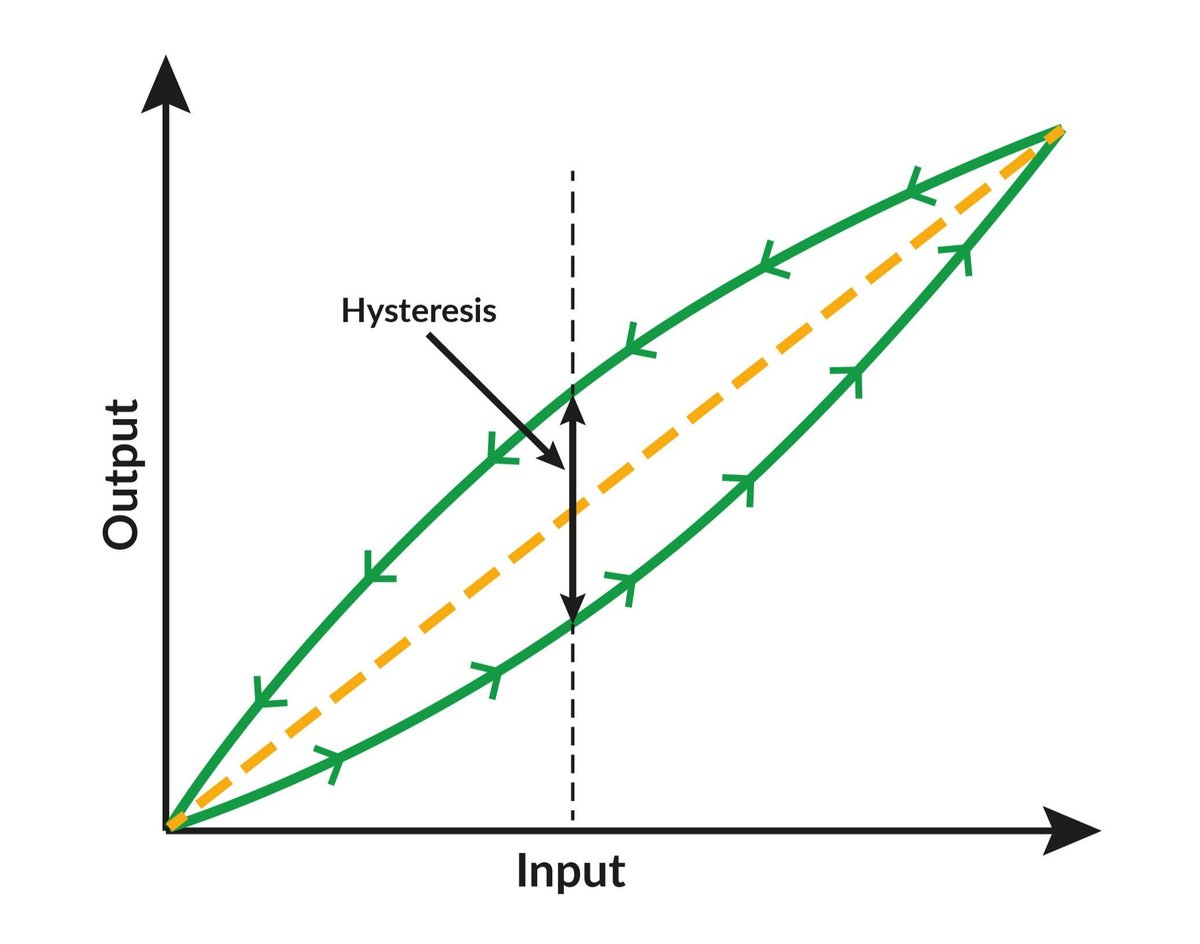

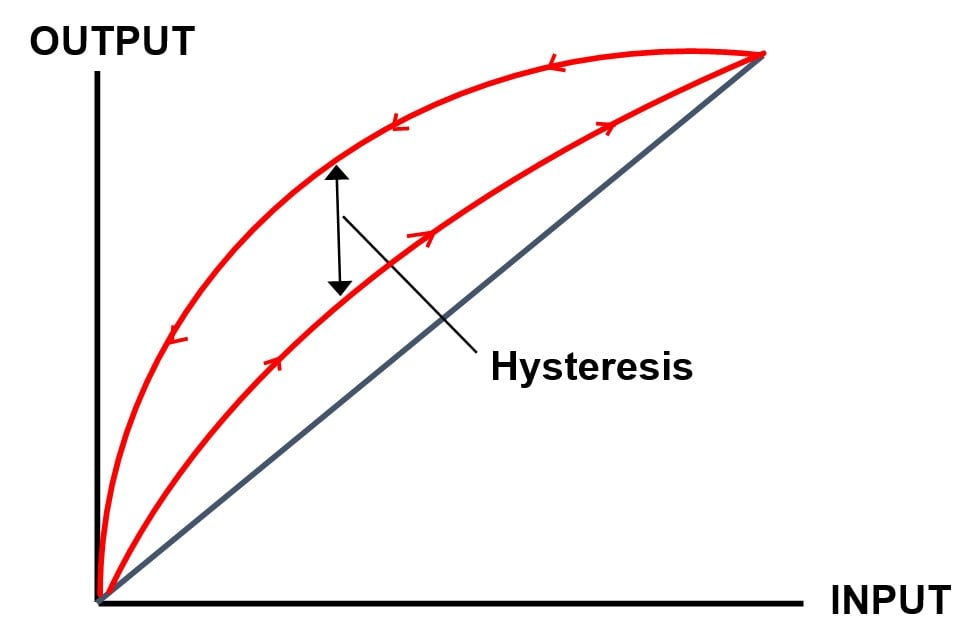

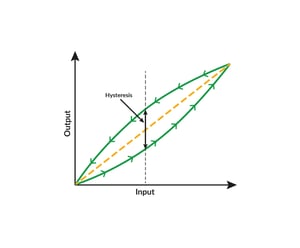

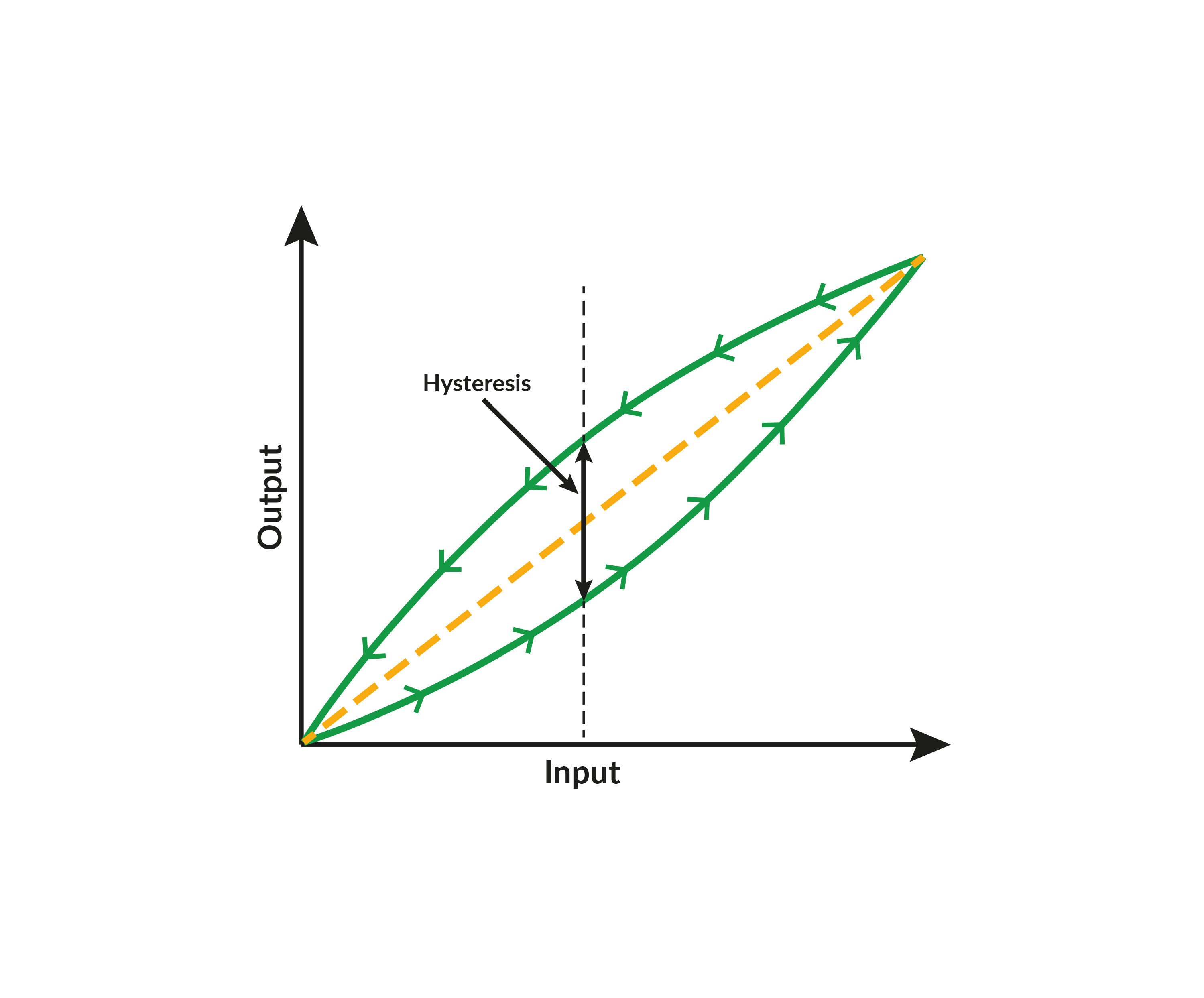

This above example illustrates how a differential pressure transmitter can behave differently when calibrated at atmospheric pressure compared to when the same differential pressure is applied under line pressure. The two curves show the deviation from the ideal value in each case. The difference between the two curves is the transmitter’s “footprint,” which needs to be taken into account when performing an on-site calibration without line pressure. In real life, there is typically also some hysteresis in the results, but for simplification that is not shown in this graphic.

This above example illustrates how a differential pressure transmitter can behave differently when calibrated at atmospheric pressure compared to when the same differential pressure is applied under line pressure. The two curves show the deviation from the ideal value in each case. The difference between the two curves is the transmitter’s “footprint,” which needs to be taken into account when performing an on-site calibration without line pressure. In real life, there is typically also some hysteresis in the results, but for simplification that is not shown in this graphic.

“It’s surprising to see how often plants are built without thinking of those who would have to come afterwards and prove/calibrate. I’ve seen for example a pressure test port aiming right at a wall with no clearance to connect.” Says

“It’s surprising to see how often plants are built without thinking of those who would have to come afterwards and prove/calibrate. I’ve seen for example a pressure test port aiming right at a wall with no clearance to connect.” Says

In the world of calibration management, choosing the right deployment model for your software makes all the difference. Whether your organization opts for a cloud-based or on-premises solution can significantly impact your operational efficiency, costs, and ability to adapt to future challenges.

In the world of calibration management, choosing the right deployment model for your software makes all the difference. Whether your organization opts for a cloud-based or on-premises solution can significantly impact your operational efficiency, costs, and ability to adapt to future challenges..png?width=1200&name=MC-family%20(1).png)

.png?width=1200&name=1998%20MC5%20(2).png)

At Beamex, we have a comprehensive range of solutions for

At Beamex, we have a comprehensive range of solutions for.png?width=1200&name=Blog%20size%20Customer%20service%20v2%20(1).png)

.png?width=1200&name=Blog%20size%20Customer%20service%20v2%20(2).png)

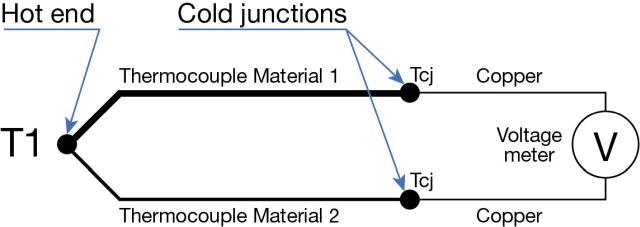

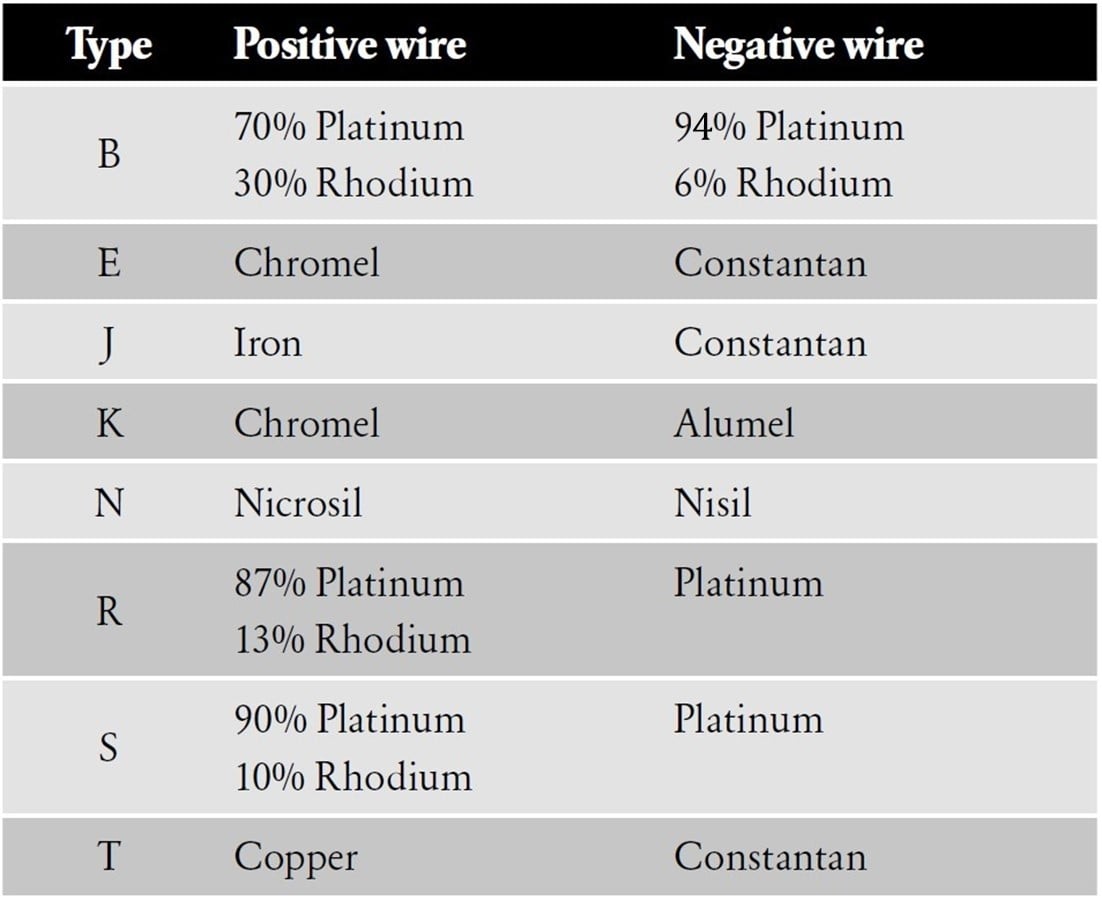

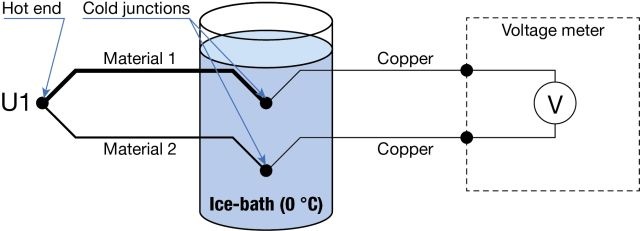

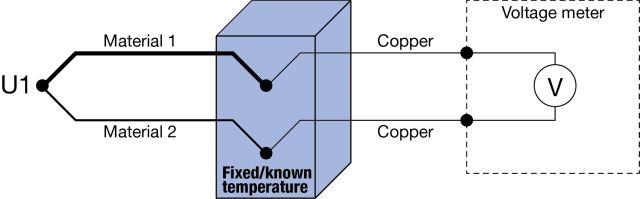

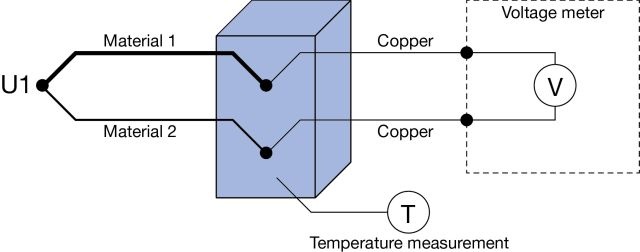

Simplified illustration of thermocouple cold junction.

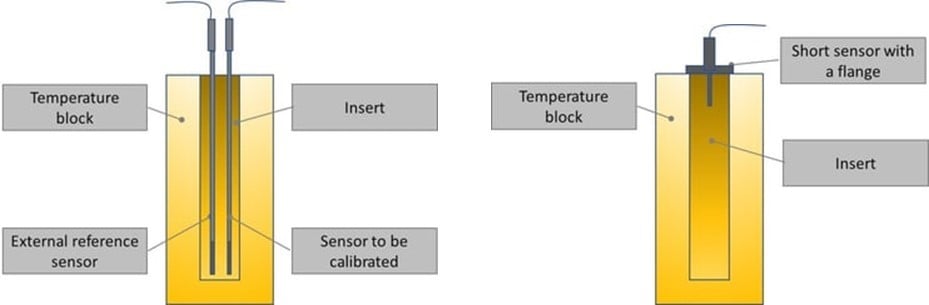

Simplified illustration of thermocouple cold junction. Sanitary temperature sensor calibration

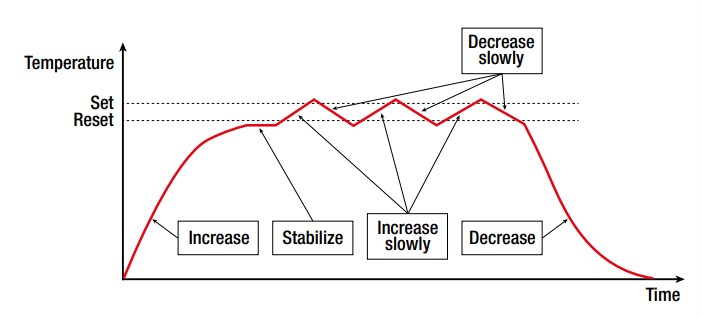

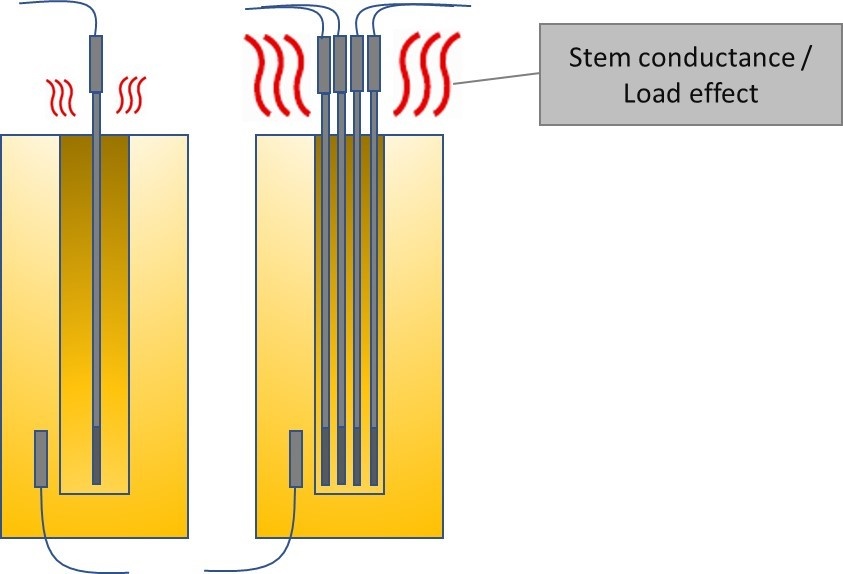

Sanitary temperature sensor calibration Temperature slope in temperature switch calibration.

Temperature slope in temperature switch calibration. Calibration uncertainty

Calibration uncertainty Webinar: How to calibrate temperature instruments.

Webinar: How to calibrate temperature instruments.

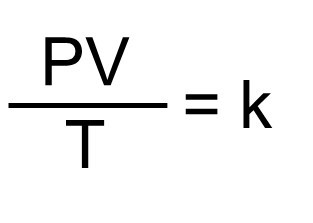

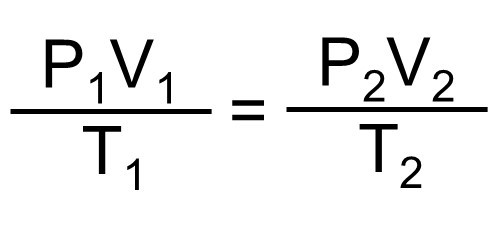

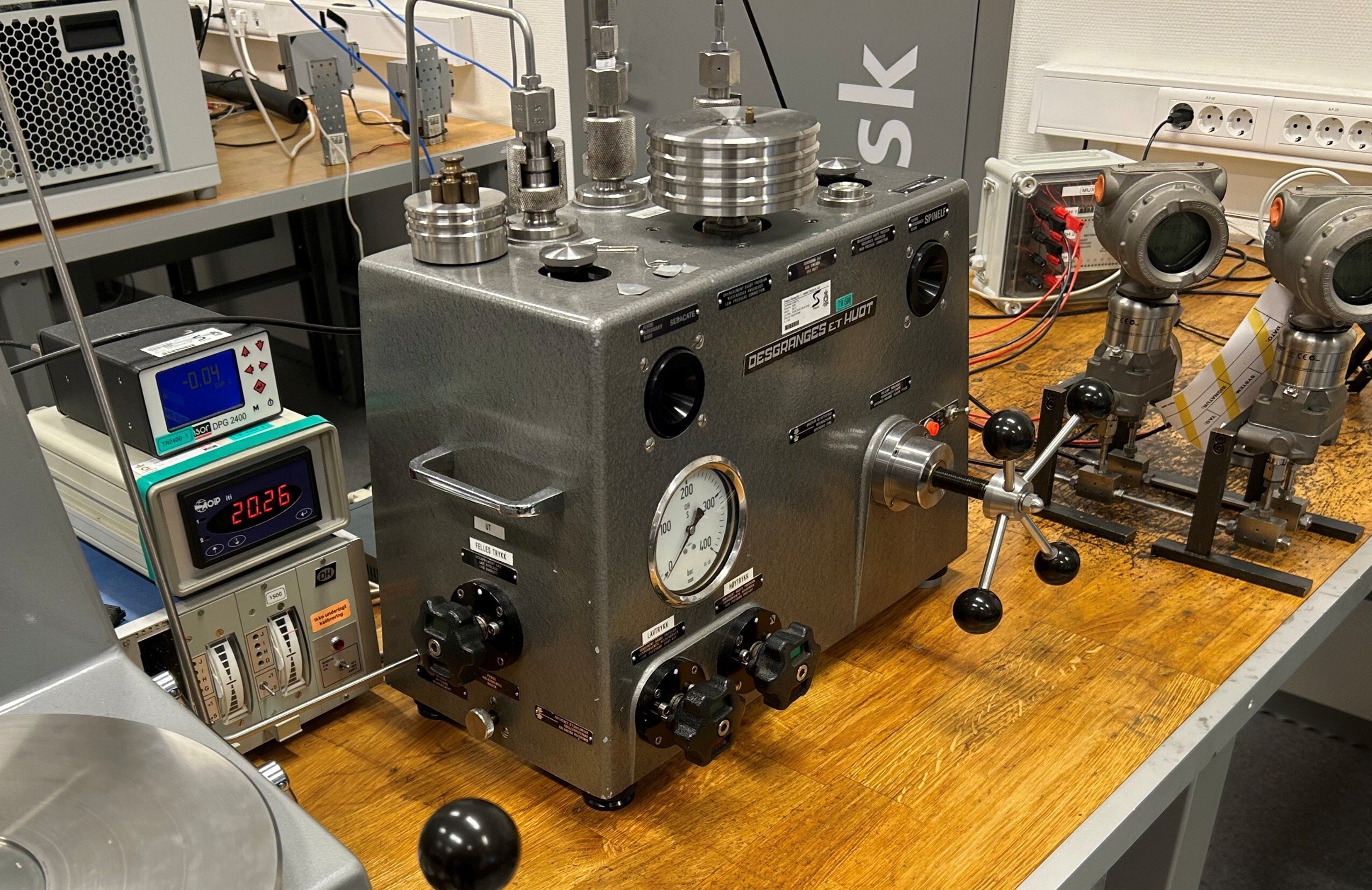

We can think of this formula as representing our normal pressure calibration system, having a closed, fixed volume. The two sides of the above formula represents two different stages in our system – one with a lower pressure and the second one with a higher pressure. For example, the left side (1) can be our system with no pressure, and the right side (2) the same system with high pressure applied.

We can think of this formula as representing our normal pressure calibration system, having a closed, fixed volume. The two sides of the above formula represents two different stages in our system – one with a lower pressure and the second one with a higher pressure. For example, the left side (1) can be our system with no pressure, and the right side (2) the same system with high pressure applied.

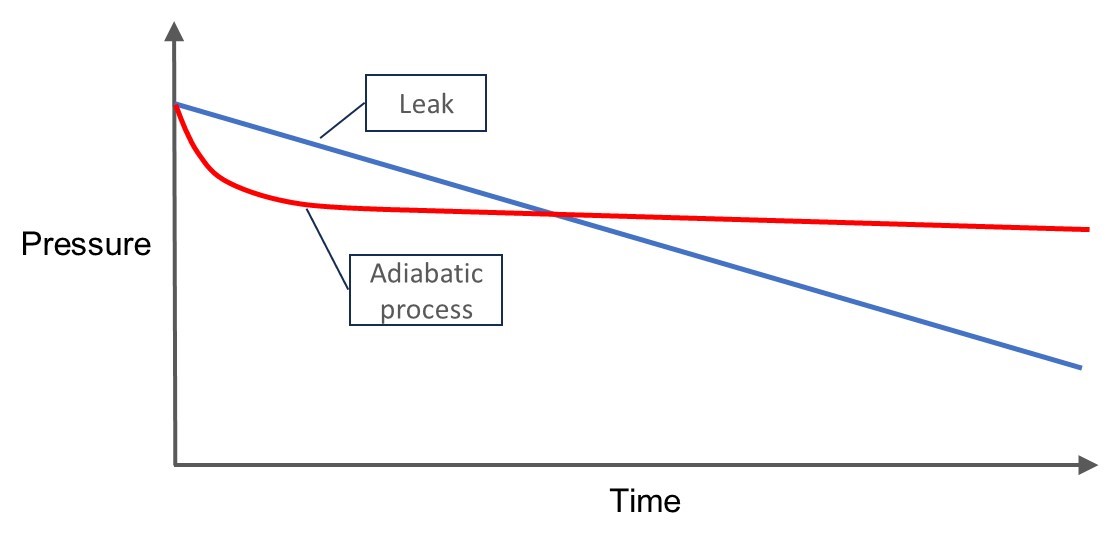

In the above image you can see how the pressure drop caused by the adiabatic process is first fast, but then slows down and eventually stabilizes (red line). While the pressure drop caused by a leak is linear (blue line).

In the above image you can see how the pressure drop caused by the adiabatic process is first fast, but then slows down and eventually stabilizes (red line). While the pressure drop caused by a leak is linear (blue line).

"With this software integration project, we were able to realize a significant return on investment during the first unit overhaul. It’s unusual, since ROI on software projects is usually nonexistent at first."

"With this software integration project, we were able to realize a significant return on investment during the first unit overhaul. It’s unusual, since ROI on software projects is usually nonexistent at first."

The Beamex solution has not been a hard sell at the compression station sites. “Beamex was very proactive in organizing online training for our teams, but the uptake was less than we expected because they are so easy to use that instead of asking basic questions during the training, our technicians were teaching themselves and quizzing the Beamex team on some fairly in-depth issues instead,” James Jepson says.

The Beamex solution has not been a hard sell at the compression station sites. “Beamex was very proactive in organizing online training for our teams, but the uptake was less than we expected because they are so easy to use that instead of asking basic questions during the training, our technicians were teaching themselves and quizzing the Beamex team on some fairly in-depth issues instead,” James Jepson says.

.png?width=1200&name=Calibration%20Software%20(1).png)

.png?width=1200&name=Black%20and%20Grey%20Bordered%20Travel%20Influencer%20YouTube%20Thumbnail%20Set%20(1).png)

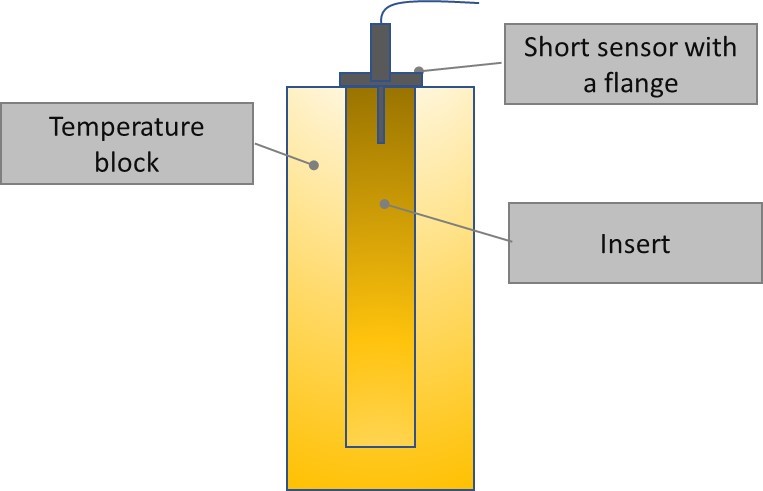

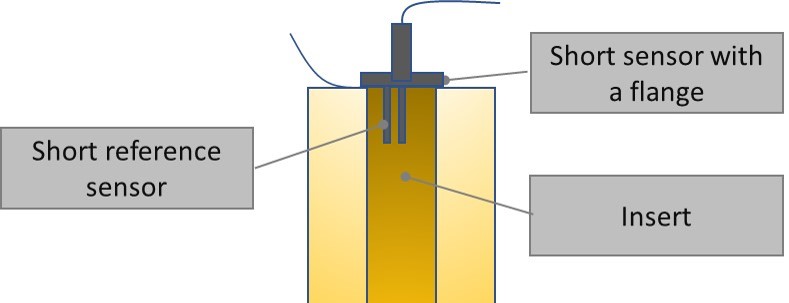

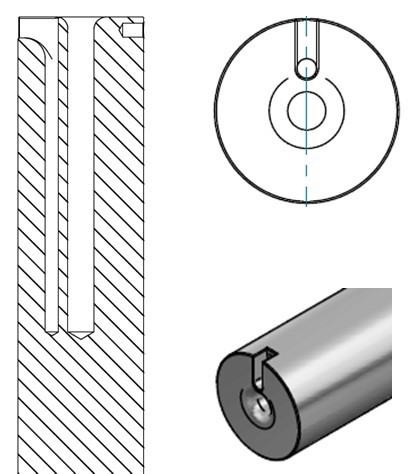

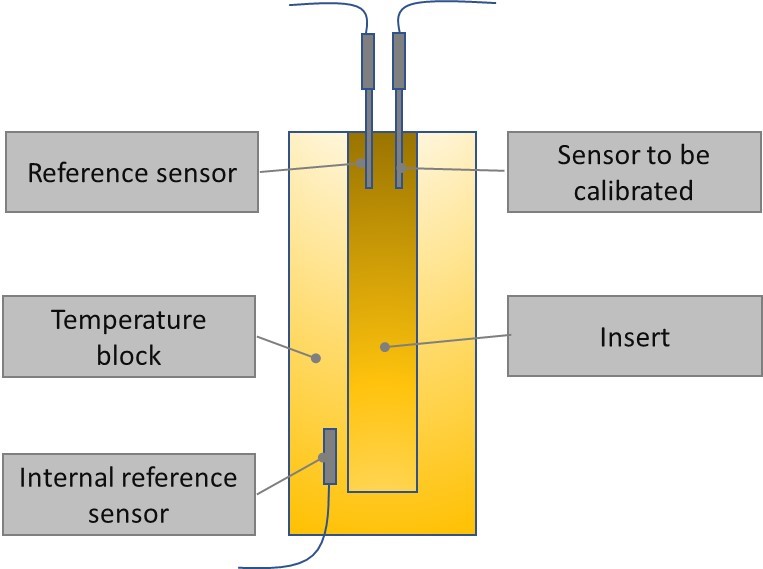

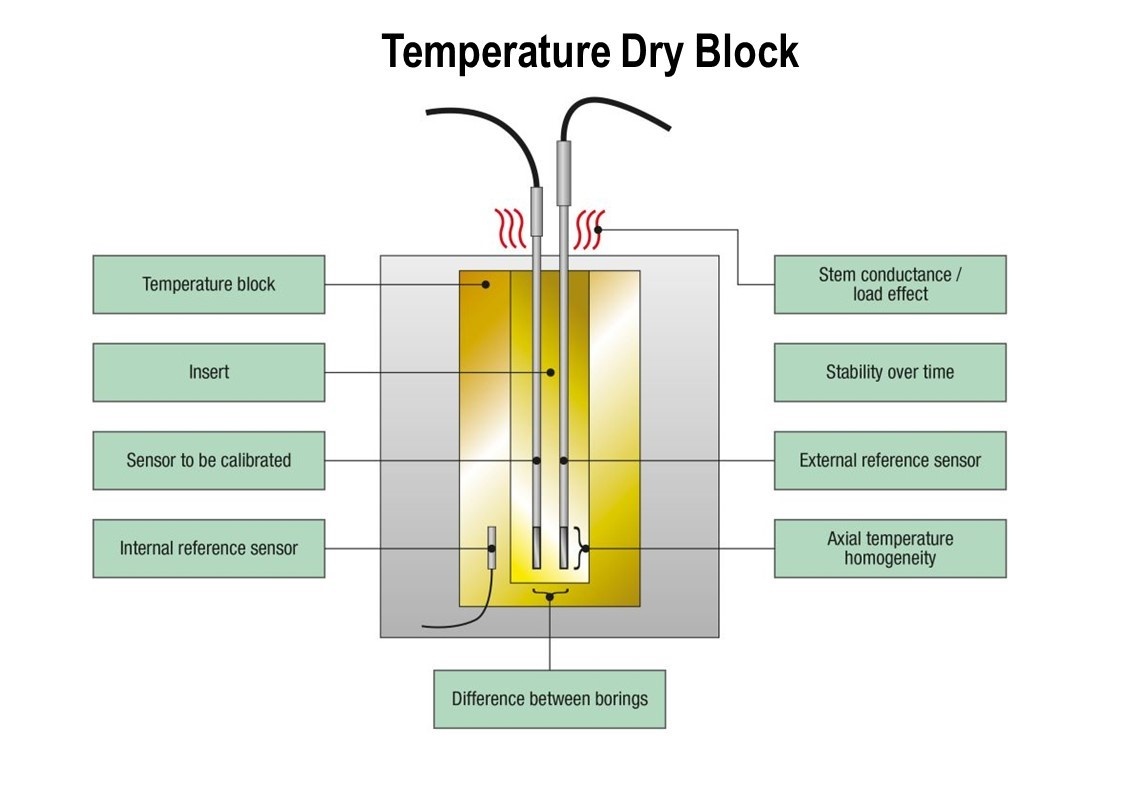

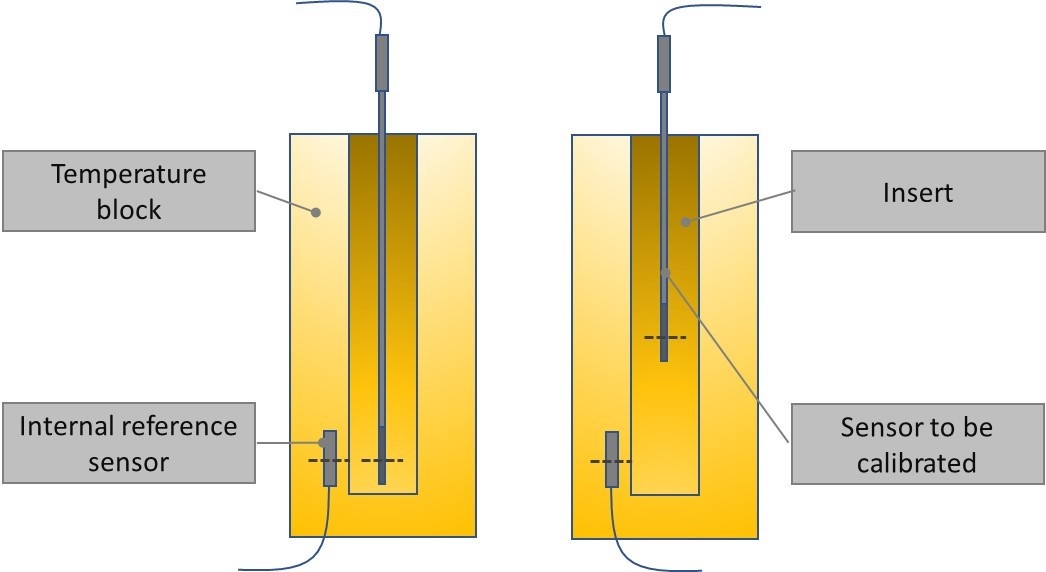

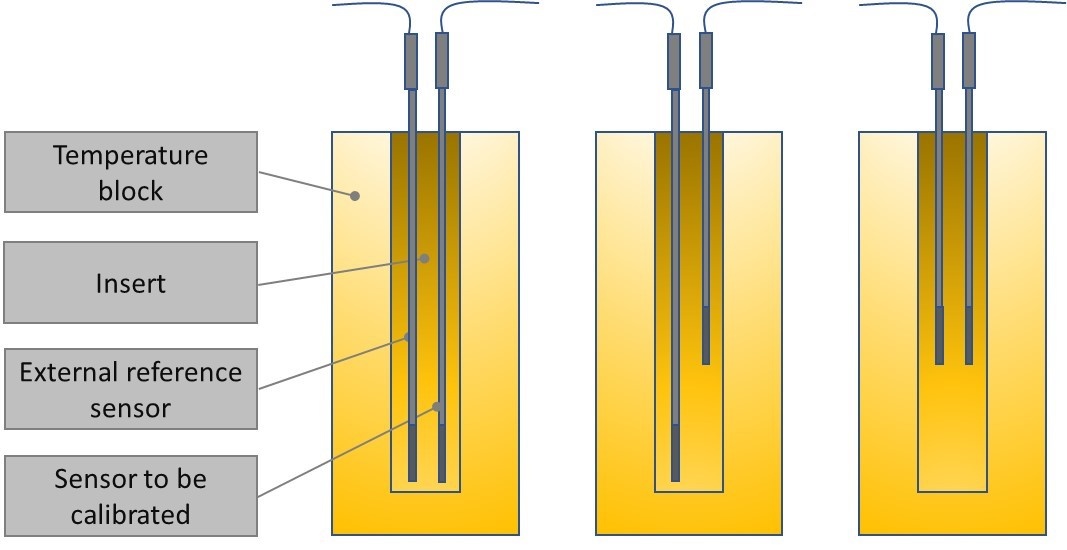

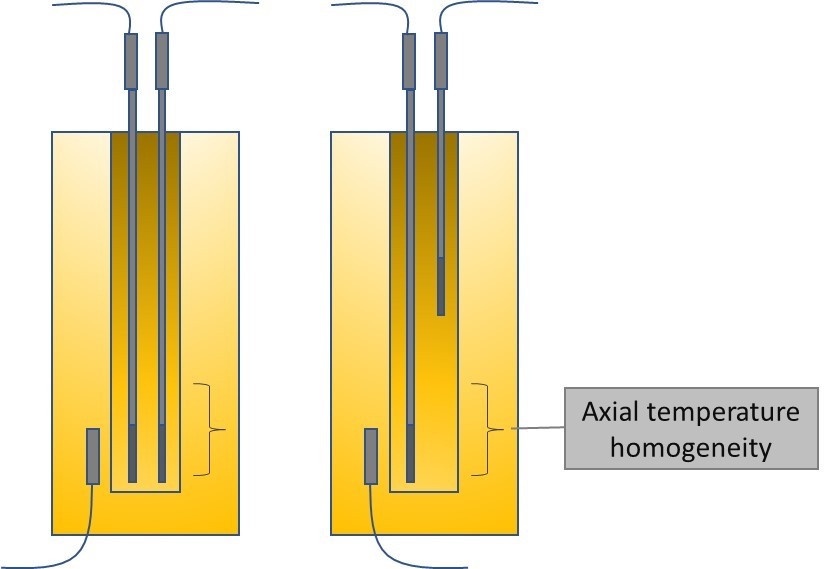

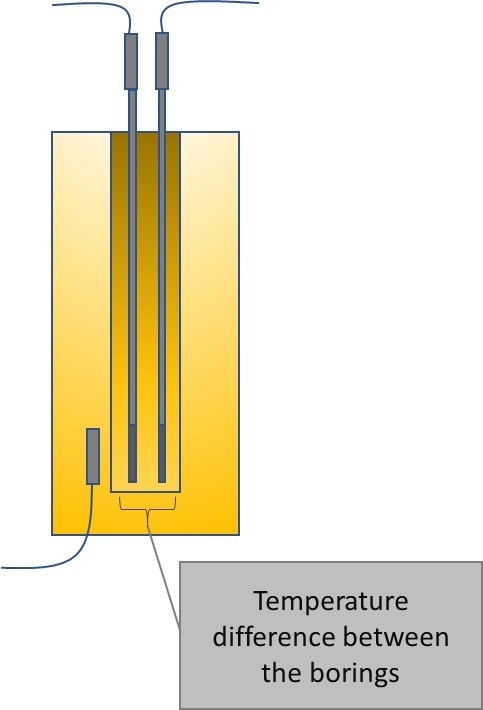

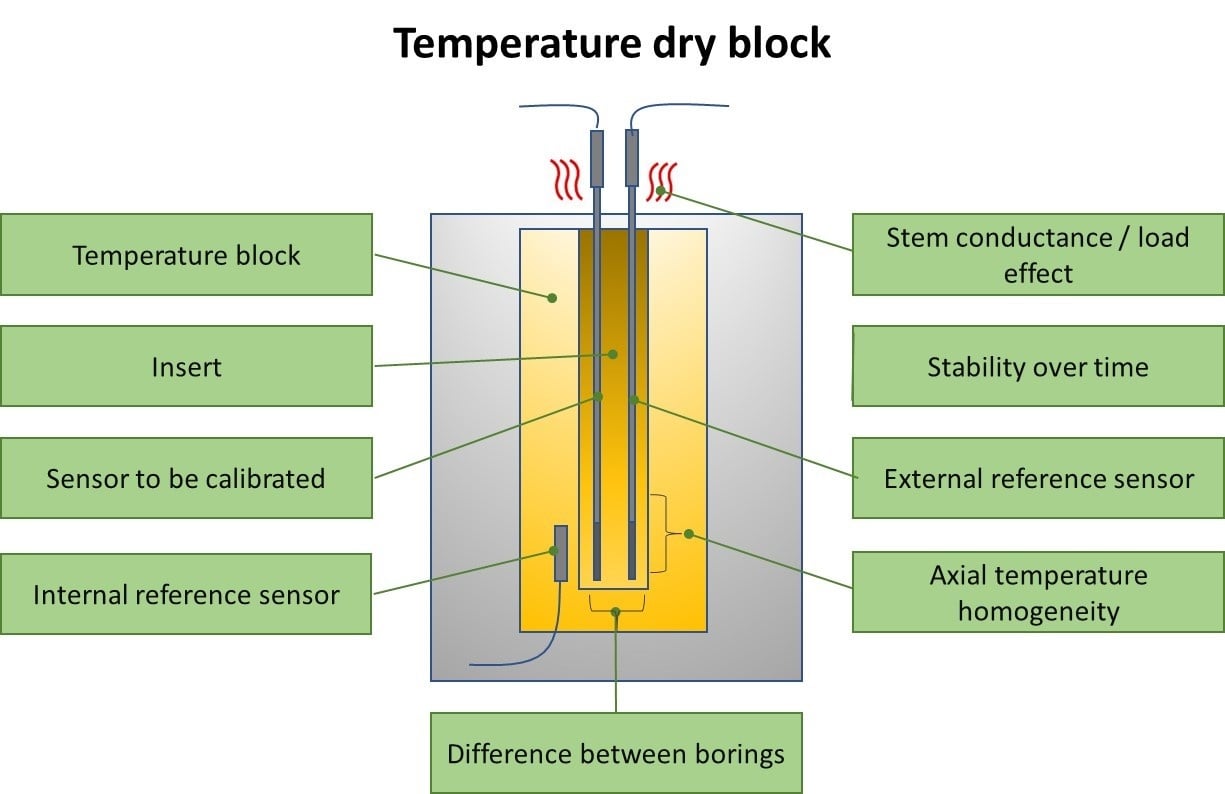

In this article, we will be covering the different uncertainty components that you should consider when you make a temperature calibration using a temperature dry block.

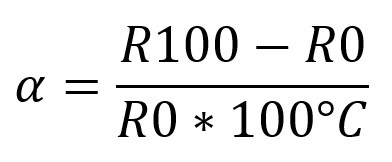

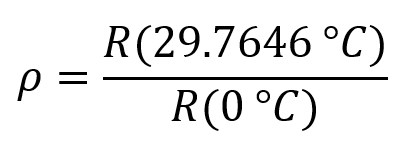

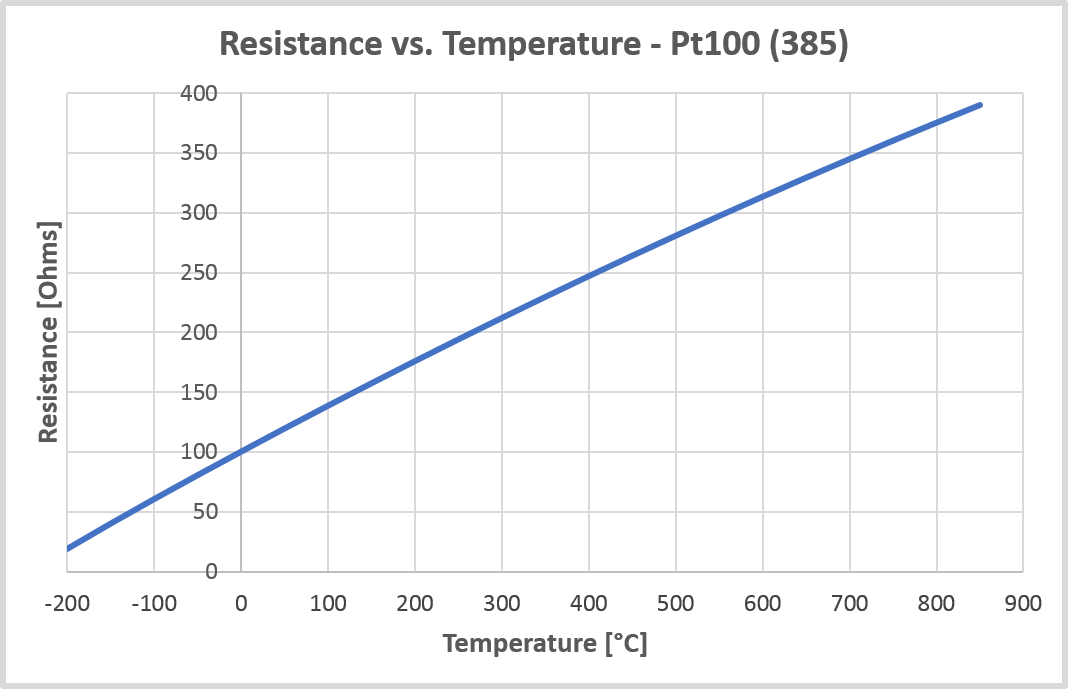

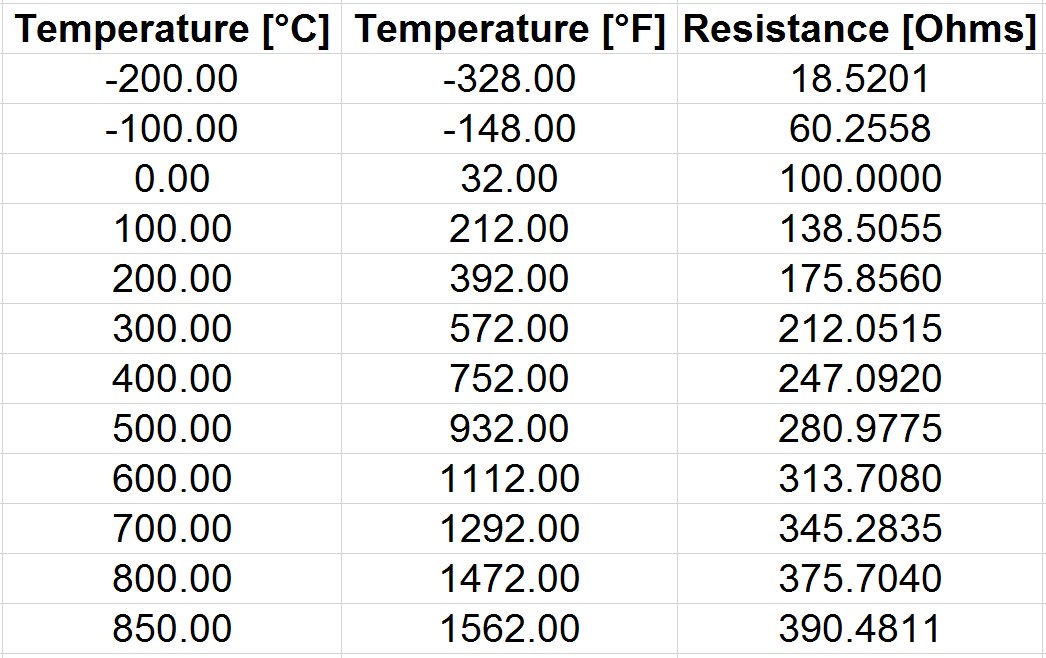

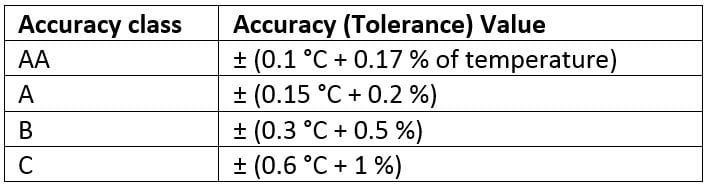

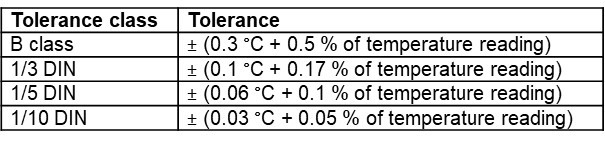

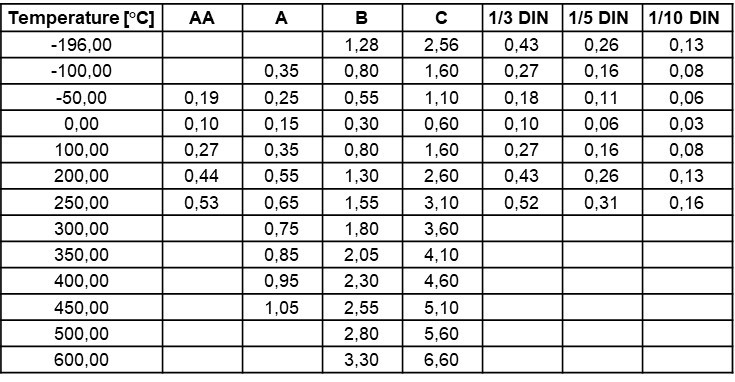

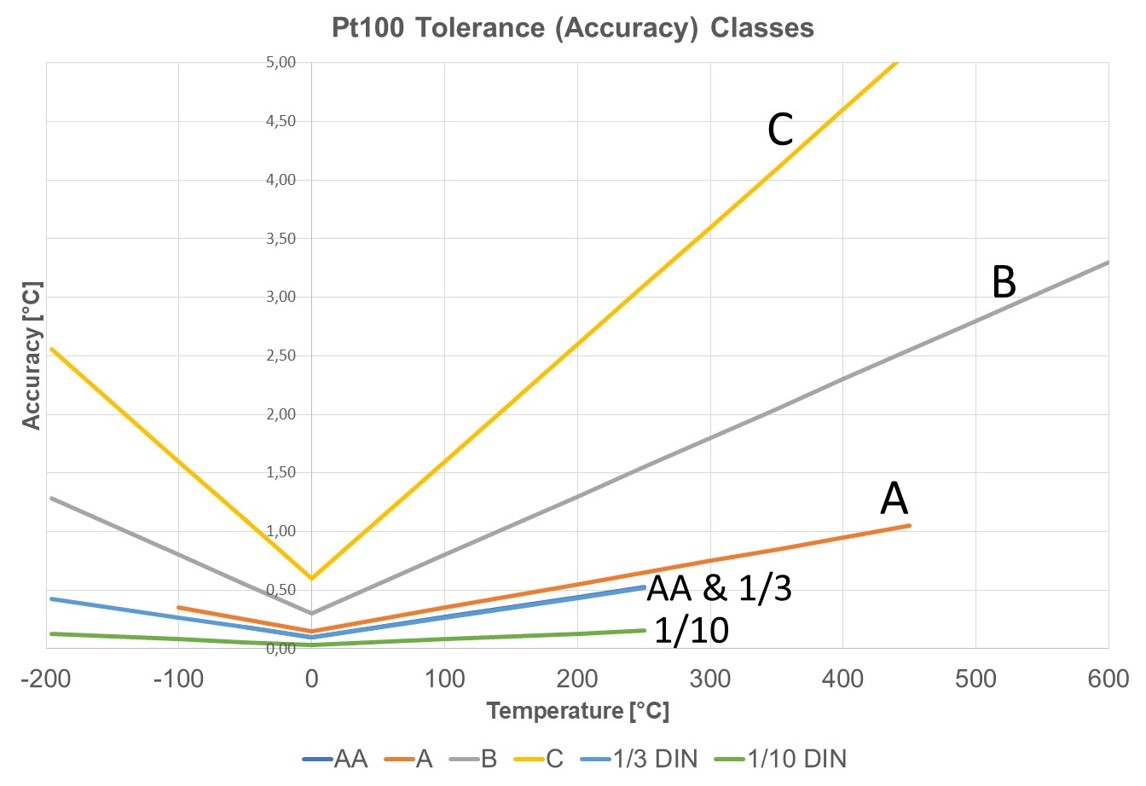

In this article, we will be covering the different uncertainty components that you should consider when you make a temperature calibration using a temperature dry block. Pt100 temperature sensors are very common sensors in the process industry. This article discusses many useful and practical things to know about the Pt100 sensors. There’s information on RTD and PRT sensors, different Pt100 mechanical structures, temperature-resistance relationship, temperature coefficients, accuracy classes and on many more.

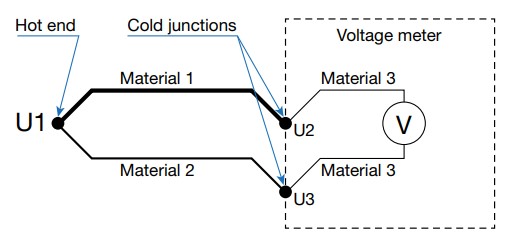

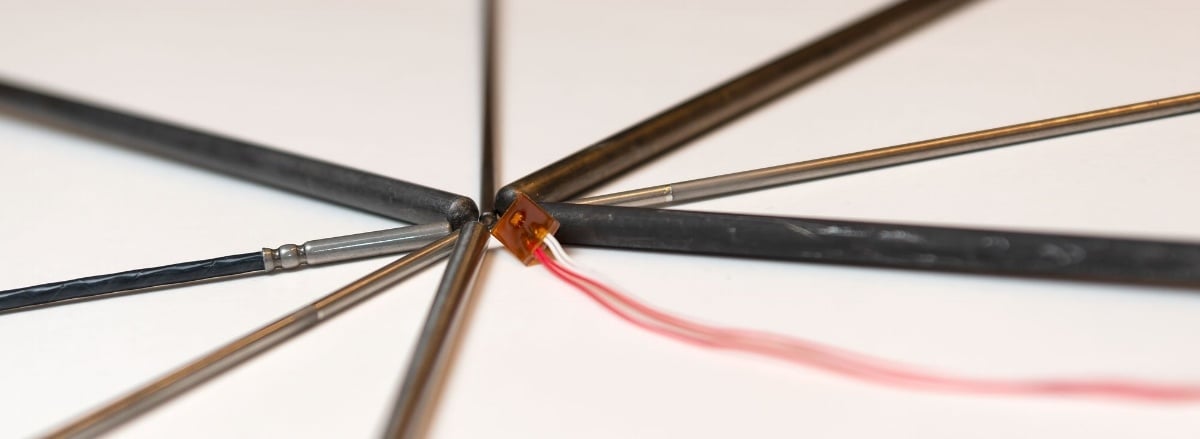

Pt100 temperature sensors are very common sensors in the process industry. This article discusses many useful and practical things to know about the Pt100 sensors. There’s information on RTD and PRT sensors, different Pt100 mechanical structures, temperature-resistance relationship, temperature coefficients, accuracy classes and on many more. Even people who work a lot with thermocouples don’t always realize how the thermocouples, and especially the cold (reference) junction, works and therefore they can make errors in measurement and calibration.

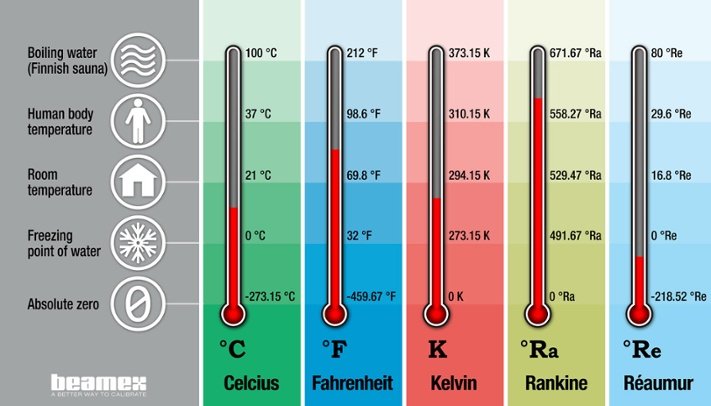

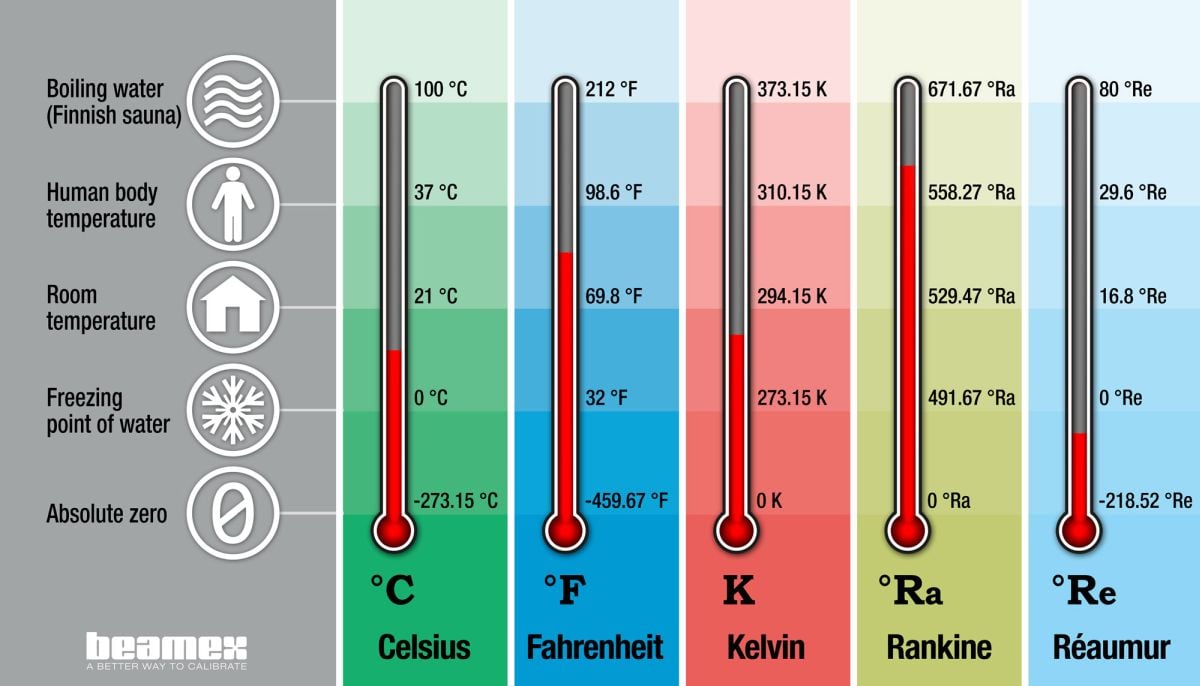

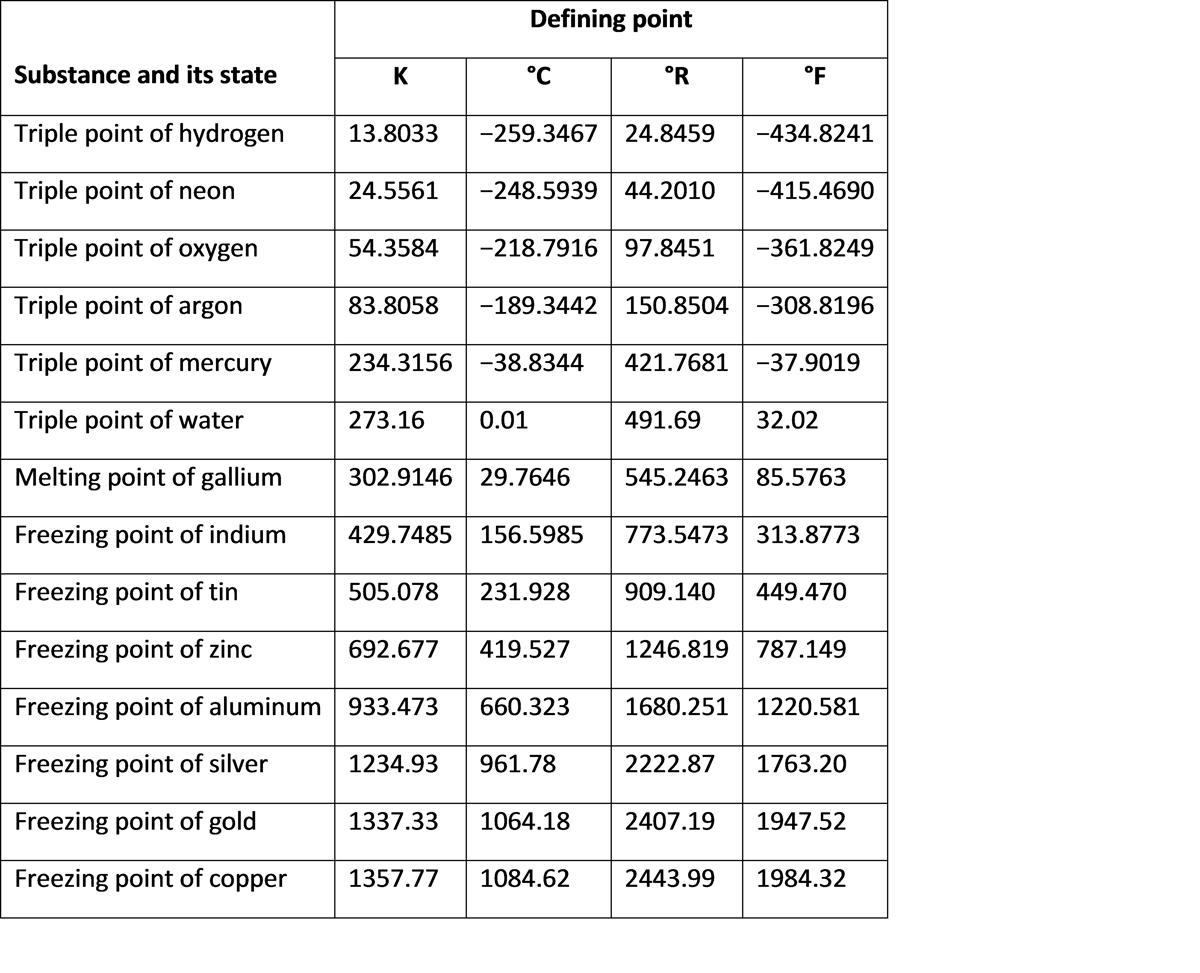

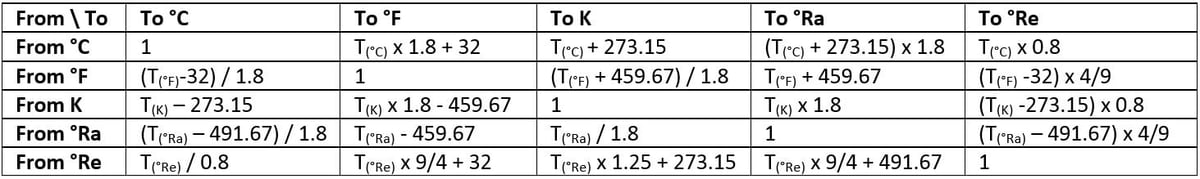

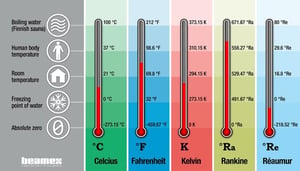

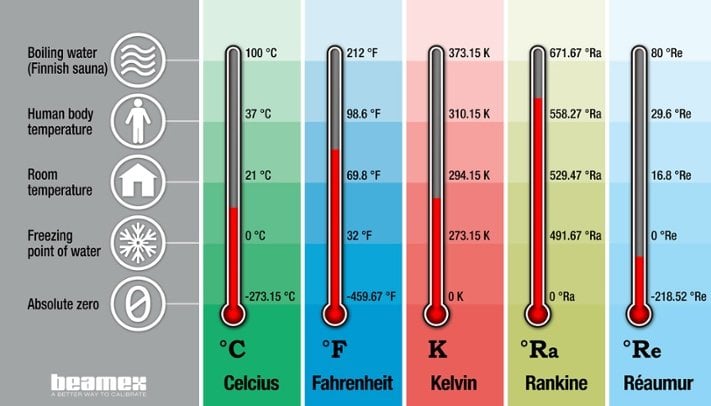

Even people who work a lot with thermocouples don’t always realize how the thermocouples, and especially the cold (reference) junction, works and therefore they can make errors in measurement and calibration. This article discusses temperature, temperature scales, temperature units and temperature unit conversions. Let’s first take a short look at what temperature really is, then look at some of the most common temperature units and finally the conversions between them.

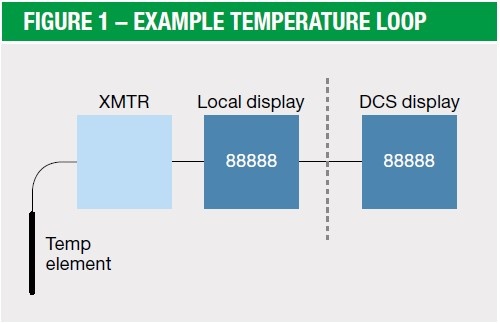

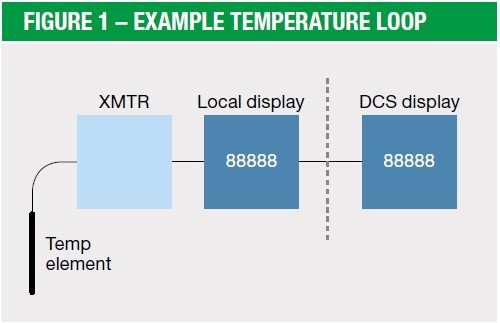

This article discusses temperature, temperature scales, temperature units and temperature unit conversions. Let’s first take a short look at what temperature really is, then look at some of the most common temperature units and finally the conversions between them. Every temperature measurement loop has a temperature sensor as the first component in the loop. So, it all starts with a temperature sensor. The temperature sensor plays a vital role in the accuracy of the whole temperature measurement loop.

Every temperature measurement loop has a temperature sensor as the first component in the loop. So, it all starts with a temperature sensor. The temperature sensor plays a vital role in the accuracy of the whole temperature measurement loop. In this article, we will take a look at the AMS2750E standard, with a special focus on the requirements set for accuracy, calibration and test/calibration equipment.

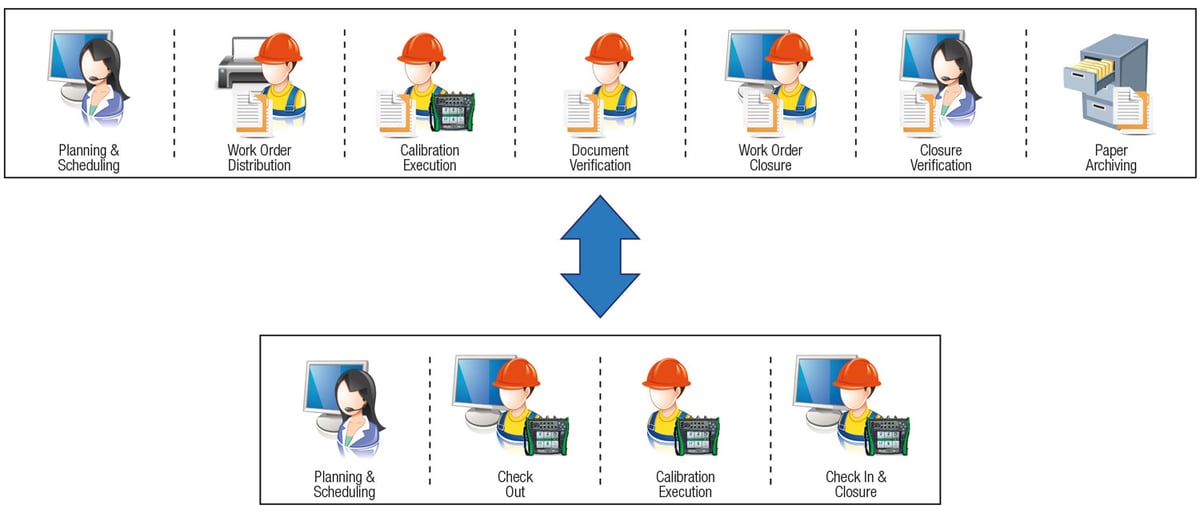

In this article, we will take a look at the AMS2750E standard, with a special focus on the requirements set for accuracy, calibration and test/calibration equipment. Most calibration technicians follow long-established procedures at their facility that have not evolved with instrumentation technology. Years ago, maintaining a performance specification of ±1% of span was difficult, but today’s instrumentation can easily exceed that level on an annual basis. In some instances, technicians are using old test equipment that does not meet new technology specifications.

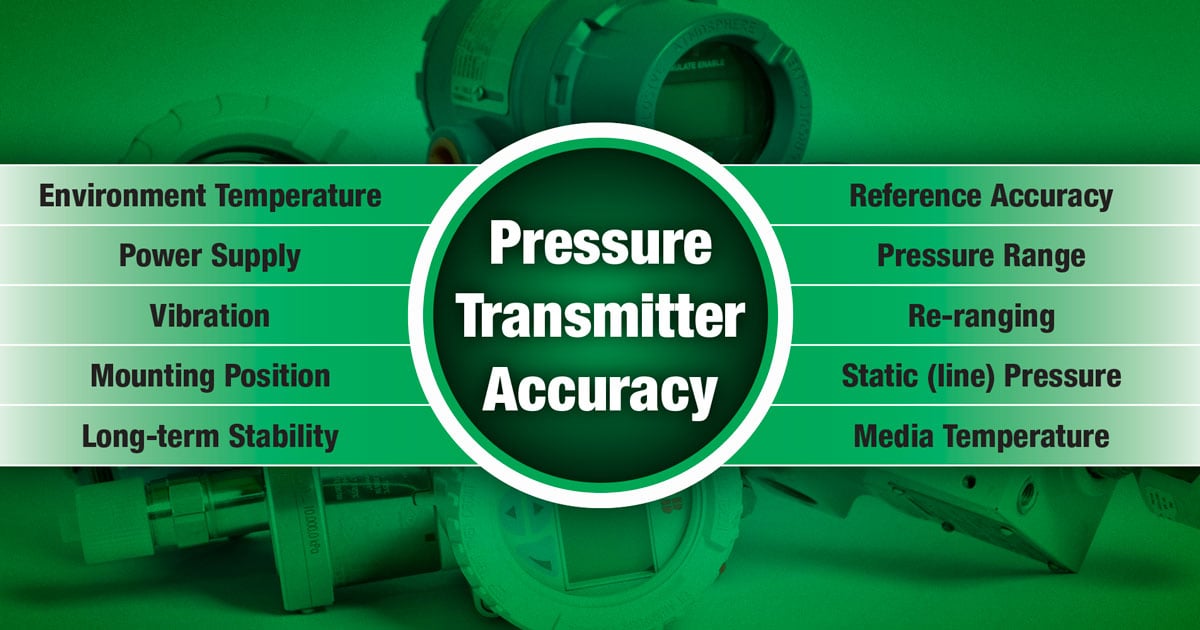

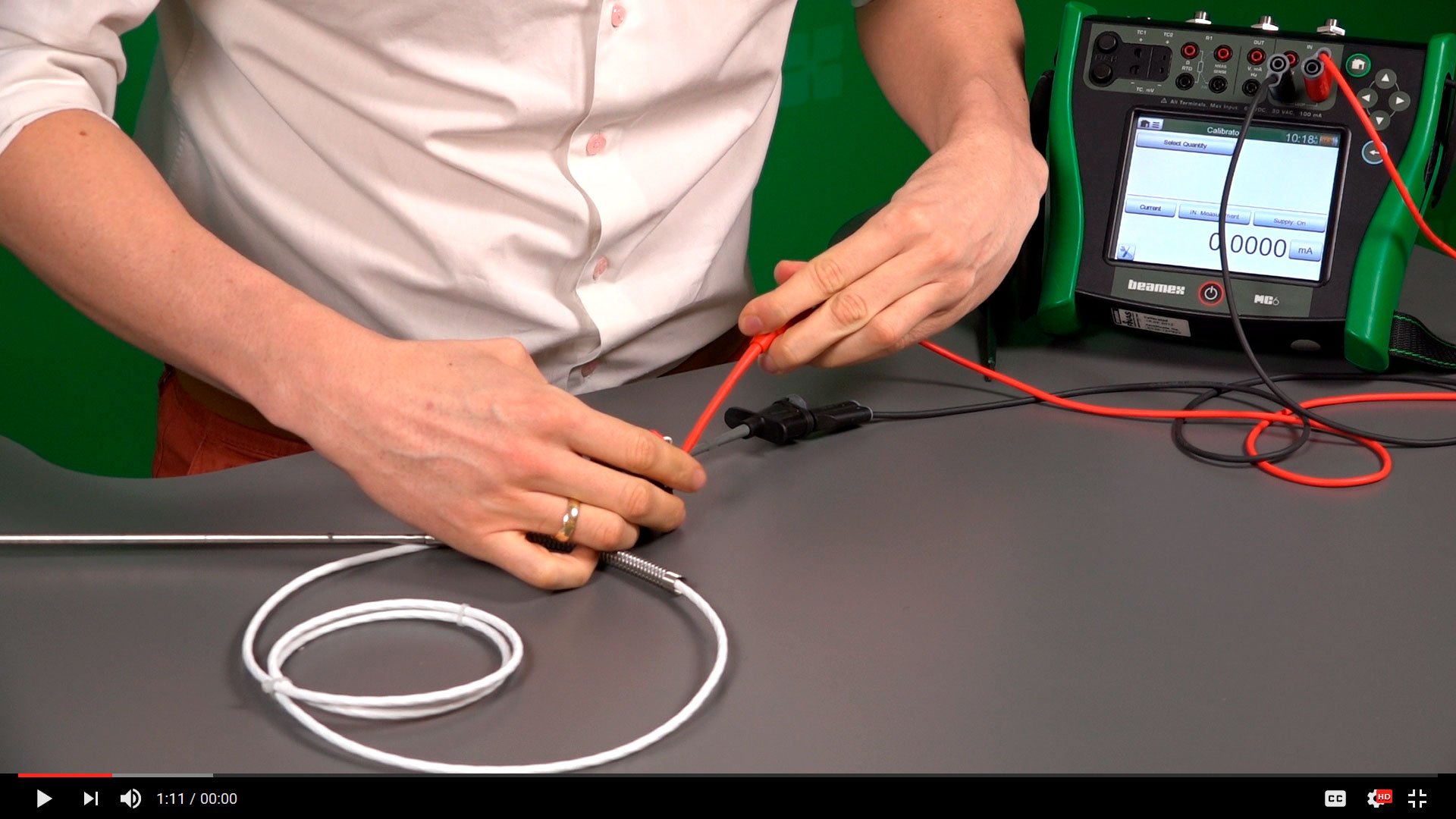

Most calibration technicians follow long-established procedures at their facility that have not evolved with instrumentation technology. Years ago, maintaining a performance specification of ±1% of span was difficult, but today’s instrumentation can easily exceed that level on an annual basis. In some instances, technicians are using old test equipment that does not meet new technology specifications.

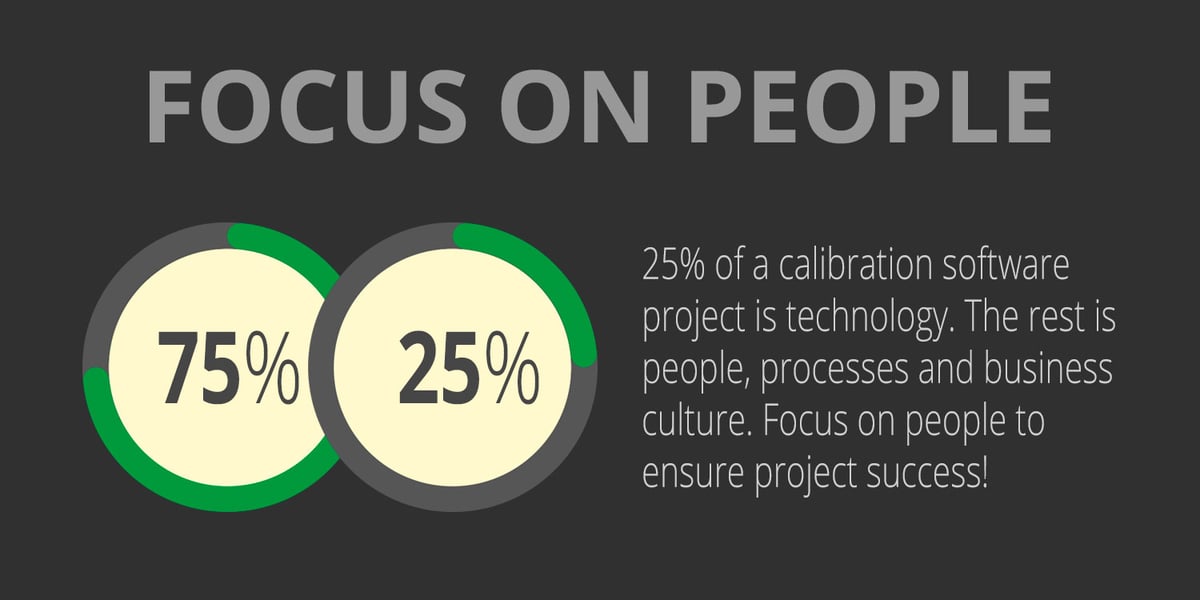

Beamex’s suite of calibration management software can benefit all sizes of process plant. For relatively small plants, where calibration data is needed for only one location, only a few instruments require calibrating and where regulatory compliance is minimal,

Beamex’s suite of calibration management software can benefit all sizes of process plant. For relatively small plants, where calibration data is needed for only one location, only a few instruments require calibrating and where regulatory compliance is minimal,  Along with CMX, the

Along with CMX, the

![Beamex blog post - Picture of SRP site - How a business analyst connected calibration and asset management [Case Story]](https://resources.beamex.com/hs-fs/hubfs/Beamex_blog_pictures/SRP%20plant.jpg?width=1200&name=SRP%20plant.jpg)

mron, a Business Analyst at Salt River Project’s corporate headquarters in Tempe, Arizona, has been serving the company for more than 40 years and has helped develop Salt River Project’s calibration processes. Several years ago, he started to investigate the possibility of linking their calibration software,

mron, a Business Analyst at Salt River Project’s corporate headquarters in Tempe, Arizona, has been serving the company for more than 40 years and has helped develop Salt River Project’s calibration processes. Several years ago, he started to investigate the possibility of linking their calibration software,

.jpg?width=1200&name=Graph%201%20-tolerance%20(0.1).jpg)

.jpg?width=1200&name=Graph%202%20-%20tolerance%20(0.25).jpg)

.jpg?width=1200&name=Graph%203%20-%20tolerance%20(1).jpg)

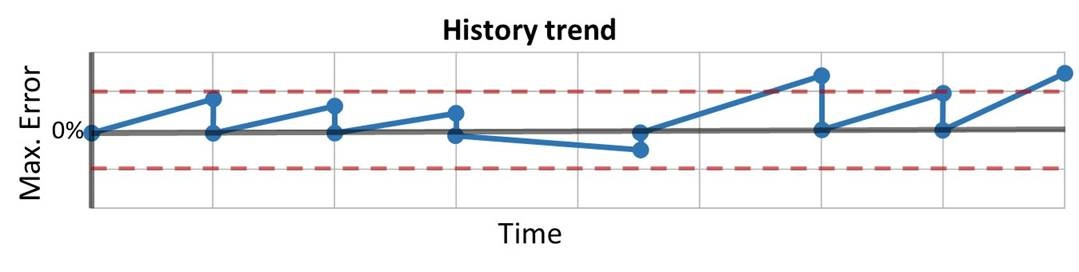

![How often should instruments be calibrated [update] - Beamex blog How often should instruments be calibrated [update] - Beamex blog](https://cdn2.hubspot.net/hub/2203666/hubfs/Beamex_blog_pictures/History_trend.jpg?width=1200&name=History_trend.jpg)

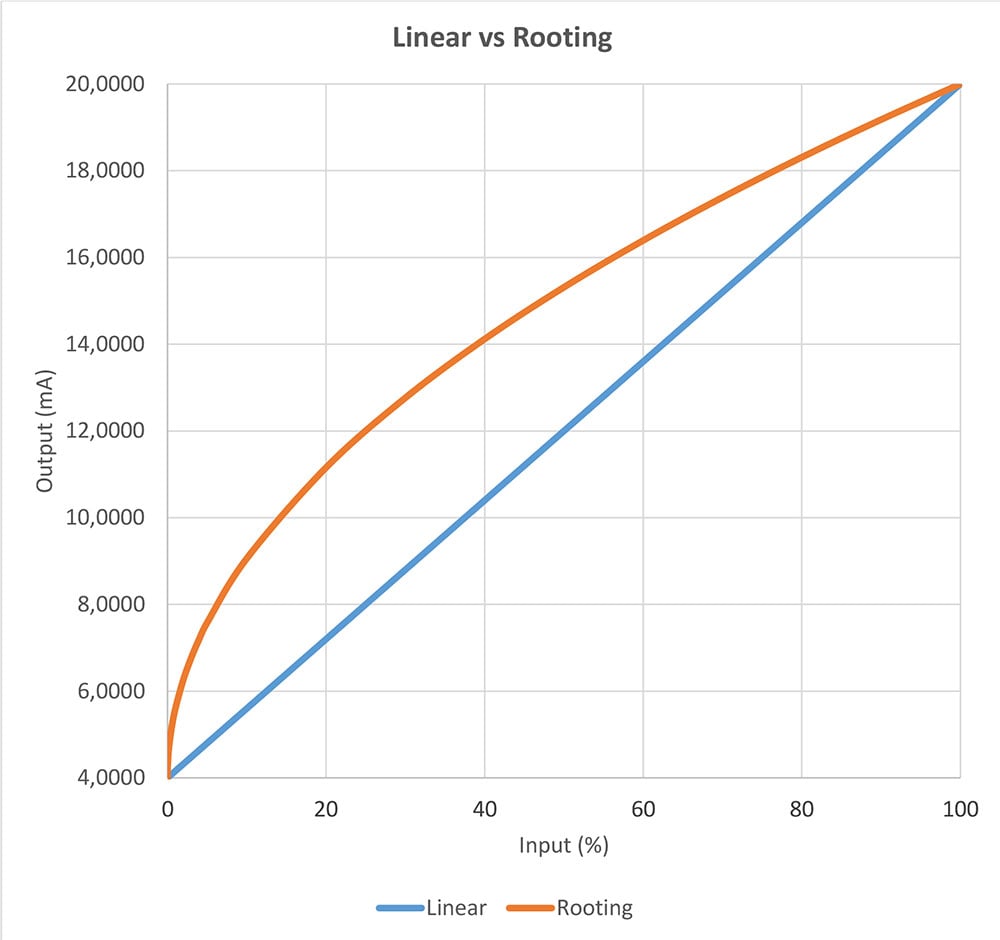

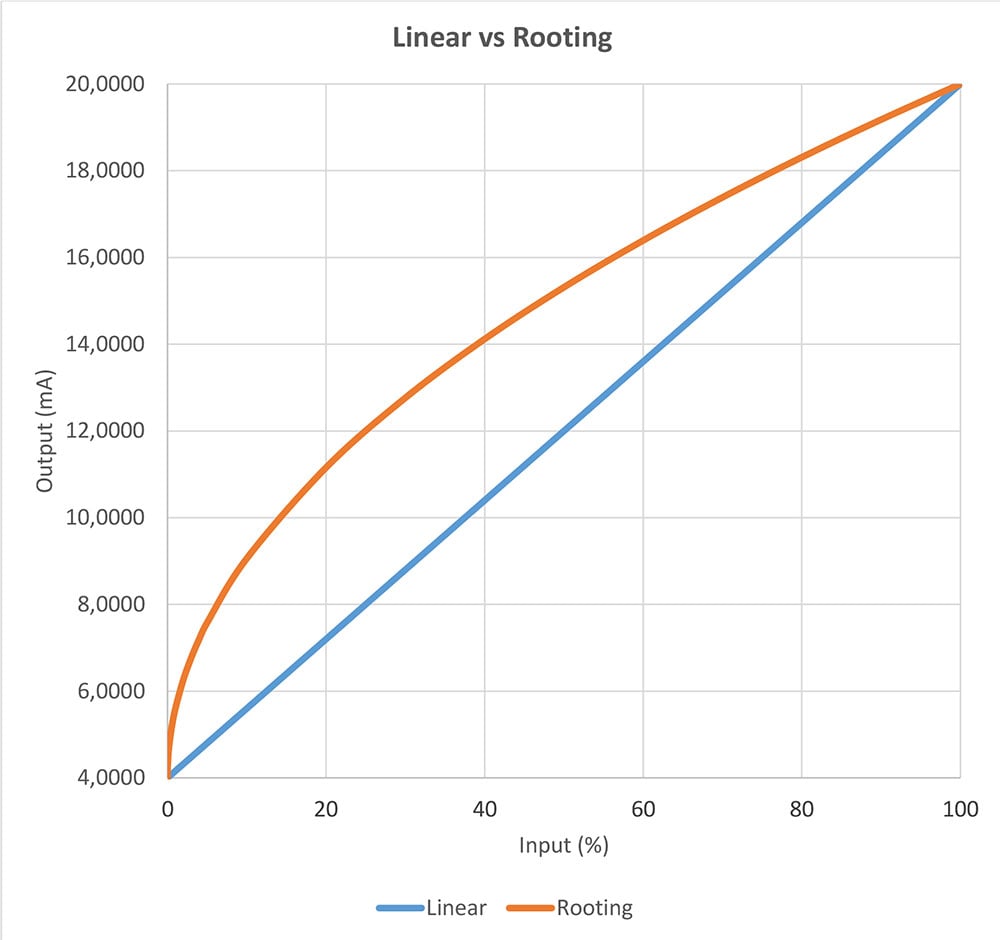

Figure 1. Linear versus square rooting.

Figure 1. Linear versus square rooting.

![Industrial Pressure Calibration Course [eLearning]](https://2203666.fs1.hubspotusercontent-na1.net/hub/2203666/hubfs/eLearning/Pressure%20elearning%20N%C3%A4ytt%C3%B6kuva%202024-11-29%20152932.png?width=300&name=Pressure%20elearning%20N%C3%A4ytt%C3%B6kuva%202024-11-29%20152932.png)

.jpg)

.png)

.png)

.png)

.png)

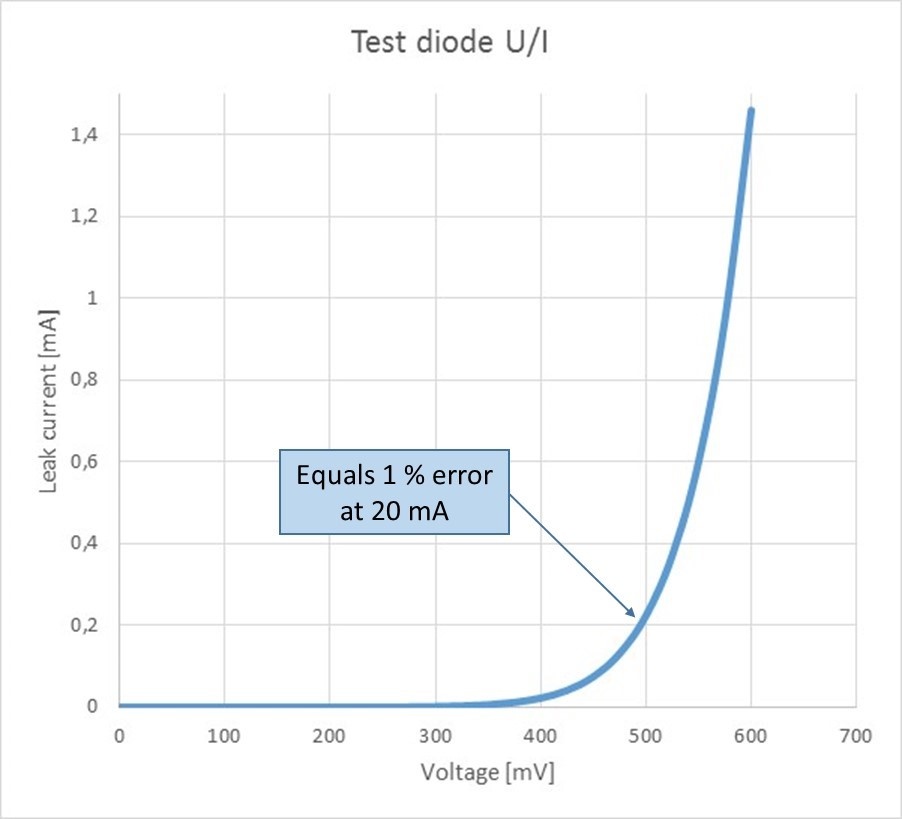

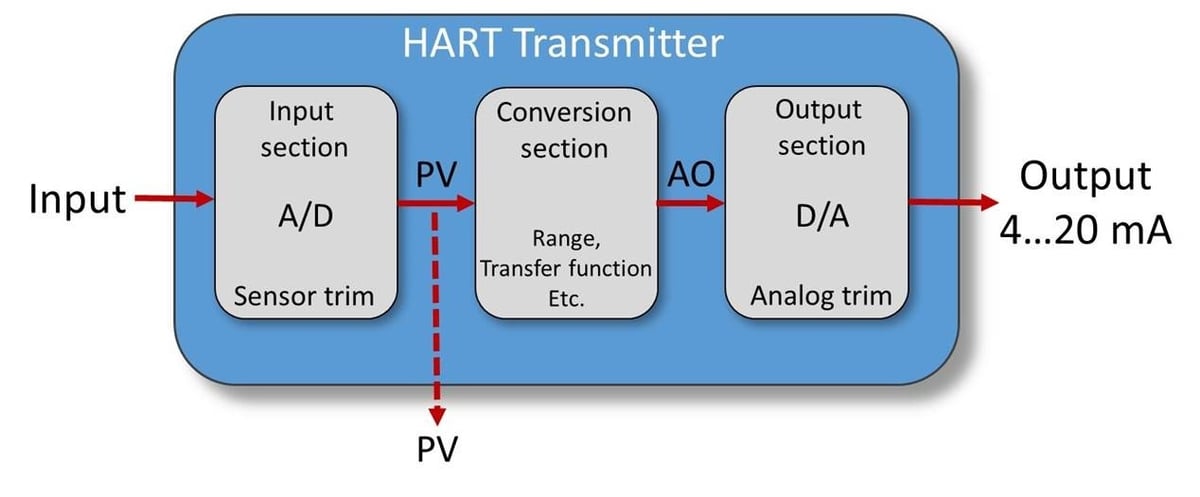

.png)