![How often should instruments be calibrated [update] - Beamex blog](https://blog.beamex.com/hs-fs/hubfs/Beamex_blog_pictures/History_trend.jpg?width=1079&name=History_trend.jpg)

How often should instruments be calibrated? That is a question we get asked often. It would be nice to give a simple answer, but unfortunately that is not possible. Instead, several considerations affect the correct calibration period. In this post, I will discuss these considerations.

In one of the very first posts in this Beamex blog, I wrote about “How often should instruments be calibrated?” Back then, the idea was to keep the posts very short, and I feel I had to leave out many important things from that post. Therefore, I decided to make an updated version of that post.

The question of how to determine the correct calibration interval remains one of our most frequently asked questions. In this post, I want to discuss both process instruments and reference standards (calibrators).

Of course, it would be nice to give one calibration interval that is valid for all situations, but unfortunately there is no magic answer. You often hear that instruments should be calibrated once a year, and while that can be the correct answer in some situations, it does not fit all purposes. Instead, several considerations affect the correct calibration period.

Let’s take a look at these considerations:

Process tolerance need vs. instrument accuracy

To start with, there is one thing that often bothers me. Let’s say in a process plant you have purchased a number of similar process instruments/transmitters. Sure, it makes sense to standardize the models. Then, you install these transmitters in all the different locations that need this quantity measured. The transmitter has an accuracy specification and it may also have a long-term stability specification from the manufacturer. Then you use the manufacturer’s transmitter tolerance as the calibration tolerance no matter where the transmitter is installed. This is a bad practice. The tolerance requirements of the process should always be taken into account!

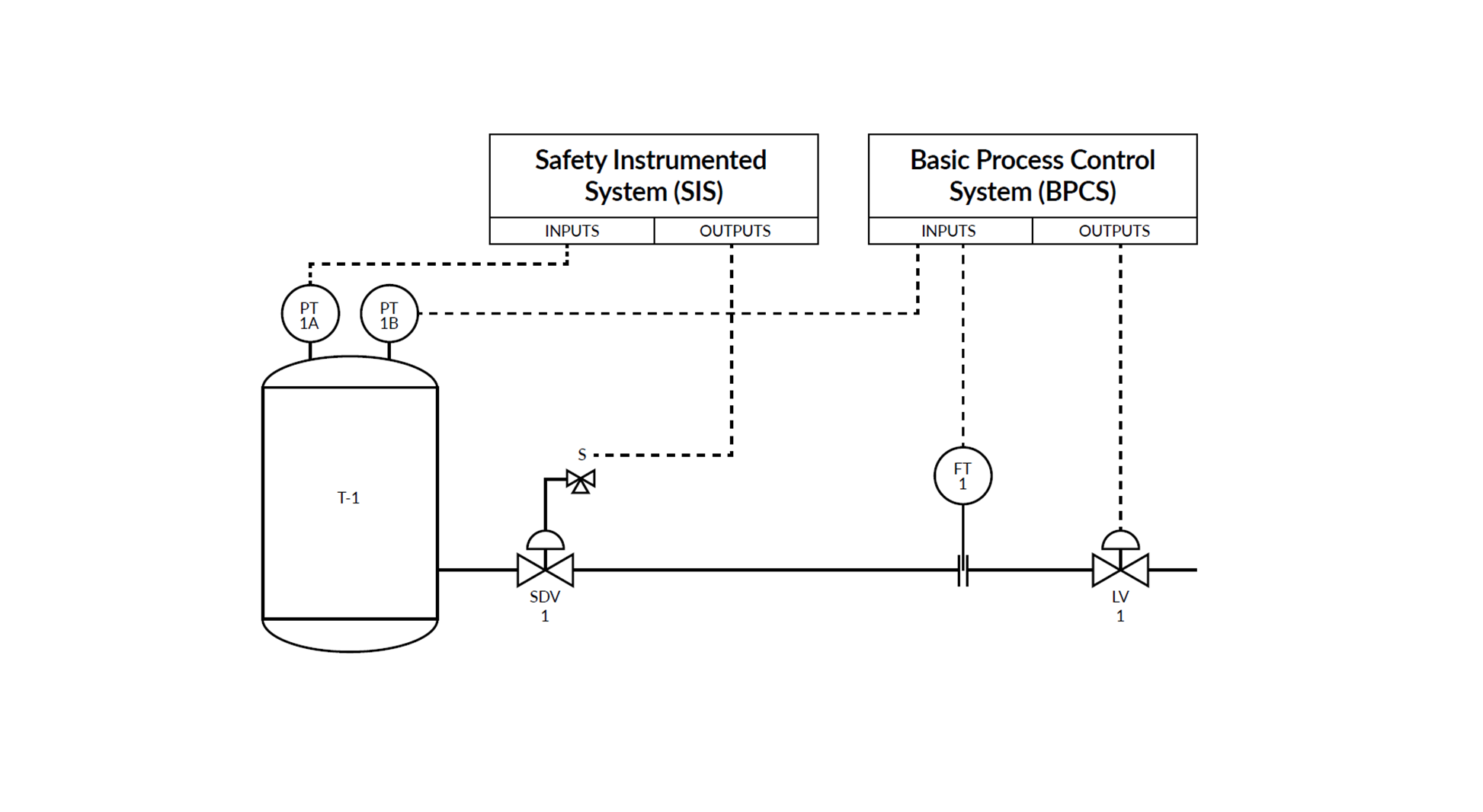

Measurement criticality

The criticality of measurement should be considered when determining the calibration period.

In some cases, the measurement instrument may be calibrated prior to an important measurement. It may also be calibrated after that measurement, to ensure that it has remained accurate throughout the measurement.

Some locations are non-critical and do not require a measurement as accurate as the transmitter's specifications, so these locations can be calibrated less often and their tolerance limit can be wider than the transmitter's specification.

The reverse also applies: some locations are very critical for the process and require very accurate measurements. If the same transmitters are installed in these critical locations, they should be calibrated more often and their calibration acceptance tolerance should be kept tight enough for the critical location. The calibration tolerance can be even tighter than the transmitter's specifications, but then you need to calibrate the transmitter often enough and verify that it remains within these tight tolerance limits.

Of course, you could also buy transmitters of different accuracy levels for different process locations, but that is not very convenient or practical. In any case, the people in the plant who best know the accuracy requirements of the different process locations should be consulted to make the right decision.

The tolerance of measurements should be based on process requirements, not on the specifications of the transmitter that is installed.
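To make this concrete, here is a minimal sketch in Python of the same transmitter model judged against two different process tolerances. The location names, the manufacturer specification and all the numbers are hypothetical, chosen only for illustration; errors are assumed to be expressed in % of span.

```python
# Hypothetical manufacturer accuracy specification, in % of span.
MANUFACTURER_SPEC = 0.10

# Hypothetical calibration tolerances set from process requirements, in % of span.
PROCESS_TOLERANCE = {
    "cooling-water return": 0.50,  # non-critical: wider than the specification
    "reactor feed": 0.05,          # critical: tighter than the specification
}


def passes(error: float, tolerance: float) -> bool:
    """Return True if the absolute error is within the given tolerance."""
    return abs(error) <= tolerance


# The same as-found error gives a different verdict depending on the location.
as_found_error = 0.08
verdicts = {
    location: passes(as_found_error, tolerance)
    for location, tolerance in PROCESS_TOLERANCE.items()
}

print(verdicts)
print("Within manufacturer spec:", passes(as_found_error, MANUFACTURER_SPEC))
```

In this sketch the transmitter is within the manufacturer's specification and fine for the non-critical location, yet it fails the tolerance of the critical location. That is the reason the acceptance limit belongs to the location, not to the transmitter model.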

How accurate is accurate enough?

In the previous section, we discussed process instruments. The same consideration also applies to reference standards and calibrators.

This also works both ways. Before you buy any calibrator or reference standard, you should make sure that it is accurate enough for your most critical calibration needs, not only today but also in the years to come. There is no point in purchasing a calibrator that won't be accurate enough next year or that does not suit the job; it's just money wasted.

On the other hand, you don't always need to buy the most accurate device in the universe. The reference standard needs to be accurate enough for your needs, but not overkill. Metrologically, of course, it is not harmful to buy a reference standard that is more accurate than you need, but it may be on the expensive side.

The usability of the standard is another thing to consider. Also, some reference standards cover multiple quantities, while others cover only one.

Manufacturer’s recommendation

For many instruments, the manufacturer recommends a calibration period. This is especially the case for reference standards and calibrators. Manufacturers often know best how their equipment behaves and drifts over time. They have also often specified a typical long-term stability for a given time, such as one year.

So, the manufacturer's recommendation is an easy and good starting point when deciding the initial calibration period. Of course, over time, you should monitor the stability of the device and adjust the calibration interval accordingly.

Depending on how good the accuracy specification of the reference standard is, you may also deviate from the manufacturer's recommendation. If the reference standard is very accurate compared to your needs, you may calibrate it less often; even if it fails to stay within its specifications, that may not be critical to you. Conversely, if the reference standard is only just accurate enough for you, you may want to calibrate it more often than the manufacturer recommends, so that you can keep it within a tighter tolerance than the manufacturer's specifications.

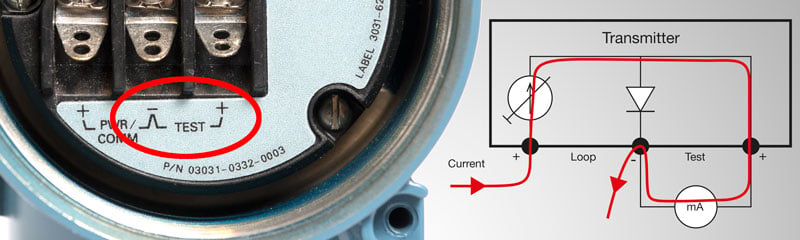

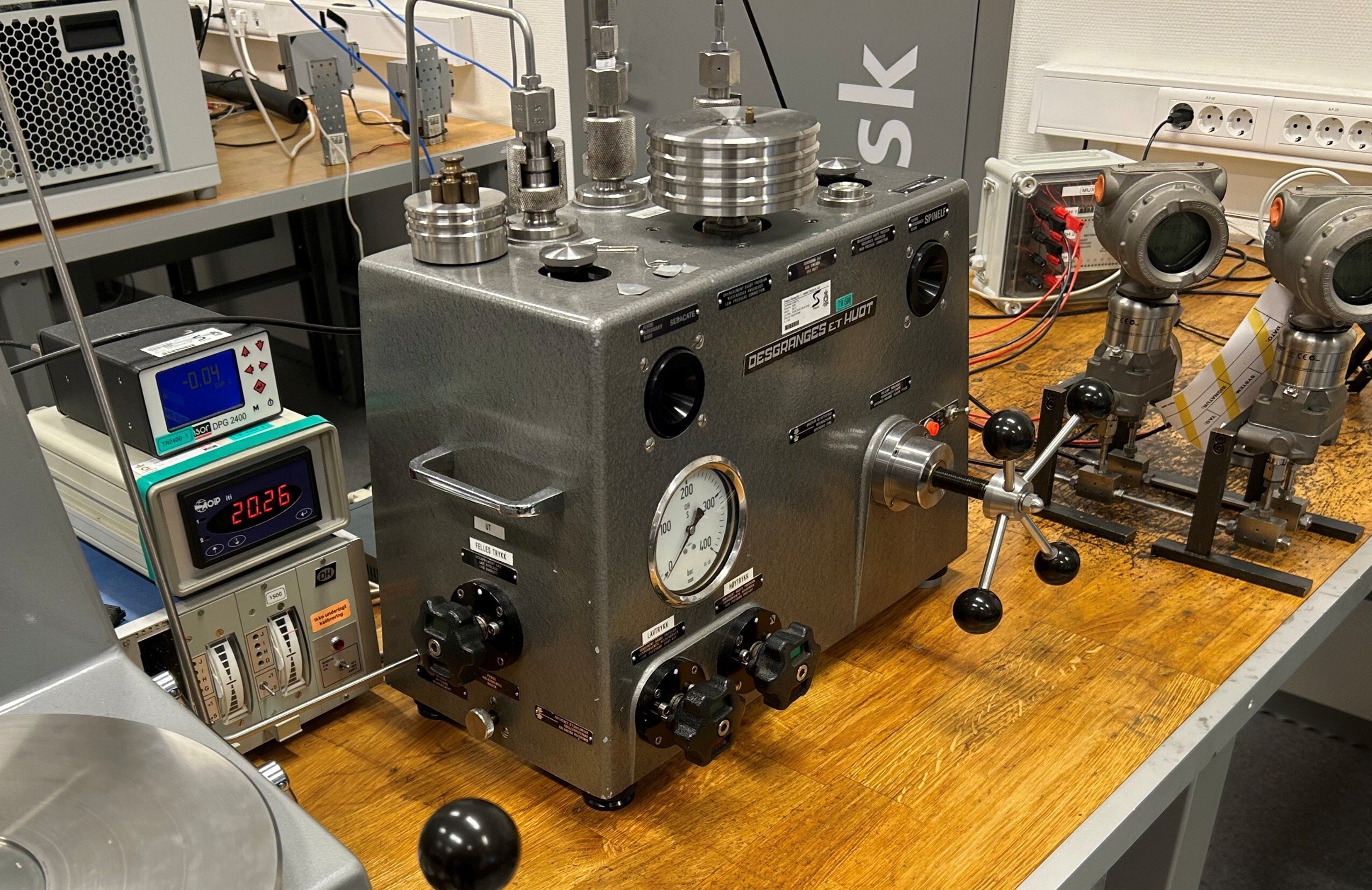

Stability history

The stability history of any measurement device is very precious information. You should always monitor the stability of your measurement devices. If the device needs to be adjusted during a recalibration, you should always save the calibration results both before (As Found) and after (As Left) the adjustment. If you only adjust the instrument and make a new calibration certificate, it will look like the instrument was very stable and there was no drift, although that is not the truth.

If you send your instrument out for recalibration, make sure you get the calibration results before and after adjustment, if an adjustment was made. Also, make sure you know if it was adjusted.
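As a rough illustration of why both results matter, the sketch below stores As Found and As Left errors for each recalibration and computes the drift over each period. The record layout, field names and numbers are assumptions made up for this example, with errors assumed to be in % of span.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class CalibrationRecord:
    """One recalibration event (hypothetical layout, errors in % of span)."""
    when: date
    as_found_error: float  # error measured before any adjustment
    as_left_error: float   # error after adjustment, or the same value if not adjusted
    adjusted: bool         # whether the instrument was adjusted


def drift_between(previous: CalibrationRecord, current: CalibrationRecord) -> float:
    """Drift over one period: As Found now minus As Left at the previous calibration."""
    return current.as_found_error - previous.as_left_error


# Made-up history: the instrument was adjusted in the second calibration.
history = [
    CalibrationRecord(date(2021, 1, 15), 0.10, 0.10, adjusted=False),
    CalibrationRecord(date(2022, 1, 14), 0.35, 0.05, adjusted=True),
    CalibrationRecord(date(2023, 1, 16), 0.30, 0.30, adjusted=False),
]

pairs = list(zip(history, history[1:]))

# Real drift per period, using the As Found results.
drifts = [drift_between(prev, cur) for prev, cur in pairs]

# What you would see if only the As Left results had been saved.
naive = [cur.as_left_error - prev.as_left_error for prev, cur in pairs]

print("Drift from As Found:", [round(d, 2) for d in drifts])
print("As Left only:       ", [round(n, 2) for n in naive])
```

With only the As Left values, the first period in this made-up history looks like a small improvement, while the As Found value shows that the instrument actually drifted by about 0.25 % of span.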

After you acquire a longer stability history of the measurement device, you may start making changes to the calibration period. If the instrument drifts too much and often fails to meet the tolerance in recalibration, then you naturally need to make the calibration period shorter. Also, if it clearly meets the tolerance limit in every recalibration, without any need for adjustment, you can consider making the calibration period longer.

You should have an accepted, written procedure in your quality system for changing calibration periods, and also defined responsibilities.

Typically, if the stability of an instrument looks good in the first recalibration, you should still wait for a few recalibrations before making the period longer. If you plan on making the period

On the other hand, if a recalibration fails, you should shorten the calibration period immediately. Naturally, that also depends on how much it fails and how critical it is.
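The reasoning in this section can be sketched as a simple rule. This is only an illustration, not a recommended procedure: the function name, the result labels, the three-pass requirement, and the halving and 1.5x factors are all assumptions. Your own written procedure should define the actual rule, and it should also consider how much a calibration failed and how critical the measurement is.

```python
from typing import Sequence


def suggest_interval(current_months: int, results: Sequence[str],
                     passes_required: int = 3) -> int:
    """Suggest the next calibration interval in months.

    results lists past recalibrations, oldest first, each one of:
      "pass"     - within tolerance, no adjustment needed
      "adjusted" - within tolerance, but adjusted anyway
      "fail"     - out of tolerance as found
    All thresholds and factors here are illustrative assumptions.
    """
    if not results:
        return current_months

    # A failed recalibration shortens the period immediately.
    if results[-1] == "fail":
        return max(1, current_months // 2)

    # Lengthen only after several consecutive clean passes, not after the first one.
    recent = results[-passes_required:]
    if len(recent) == passes_required and all(r == "pass" for r in recent):
        return current_months + current_months // 2

    # Otherwise keep the current period and keep collecting history.
    return current_months


print(suggest_interval(12, ["pass"]))
print(suggest_interval(12, ["pass", "pass", "pass"]))
print(suggest_interval(12, ["pass", "pass", "fail"]))
```

The asymmetry is deliberate: one failure is enough to shorten the period, but a single good result is not enough to lengthen it.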

If you use the Beamex CMX Calibration Manager software, it will generate history trend graphics for you automatically at the push of a button.

Previous experience

In the previous section, the stability history was discussed as an important consideration. Sometimes you already have previous experience with the stability of the instrument type that you need to set the calibration period for. Instruments of the same type often behave similarly in their stability and long-term drift. So, past experience with similar measuring instruments should be taken into account.

Similar types of instruments can have similar calibration periods, but this is not always true, as different measurement locations have different criticality, different needs for accuracy and may also have different environmental conditions.

Regulatory requirements, quality system

For certain industry measurements, there can be regulatory requirements, based on a standard or regulation, that stipulate the accepted length of the calibration period. It is difficult to argue with that one.

I've heard customers say that it is difficult to change the calibration period as it is written in their quality system. It should, of course, be possible for you to change your quality system if needed.

The cost of an out-of-tolerance (fail) calibration

A proper risk analysis should be performed when determining the calibration period of instruments.

One thing to consider when deciding on the calibration period of any instrument is the cost and consequences if the calibration fails. The aim is to find a good balance between the costs of the calibration program and the costs of not calibrating enough. You should ask yourself “what will happen if this instrument fails the recalibration?”

If the application is non-critical and a failed recalibration is not that important, then it is acceptable for the calibration to fail from time to time. You should still adjust the instrument during the calibration so that it measures correctly and has more room for drift before the next recalibration.

If it is a critical measurement/instrument/application, then the consequences of a failure in recalibration can be very serious. In the worst case, it may result in a warning letter from a regulatory body (like the FDA in the pharmaceutical industry), loss of license to produce a product, negative reputation, loss of customer confidence, physical injury to persons on the job or to those who receive a bad end product and so on. Also, one really alarming consequence is if you need to recall delivered products from the market because of an error found in calibration. For many industries, this kind of product recall is obviously a very big issue.

As an example, in the heat treatment industry, you cannot easily see whether the final product is properly heat treated, but a fault in heat treatment can have a dramatic effect on the properties of the metal parts, which typically go to the aerospace or automotive industry. An erroneous heat treatment can cause very severe consequences.

Certainly, pharmaceutical and food industries will also face huge consequences if poor quality products are delivered because of poor calibration or lack of calibration.

Other aspects that affect the calibration period

There are also many other aspects that will influence the calibration period, such as:

- The workload of the instrument: if the instrument is used a lot, it should be calibrated more often than one that is being used very seldom.

- Environmental conditions: an instrument used in extreme environmental conditions should be calibrated more often than one used in stable conditions.

- Transportation: if an instrument is transported frequently, you should consider calibrating it more often.

- Accidental drop/shock: if you drop or otherwise shock an instrument, it may be wise to have it calibrated afterward.

- Intermediate checks: in some cases, the instrument can be checked by comparing it against another instrument, or against some internal reference. For example, for temperature sensors, an ice bath is a way to make a relatively accurate one-point check. Such intermediate checks between the actual full recalibrations add certainty to the measurement and can be used to extend the calibration period.

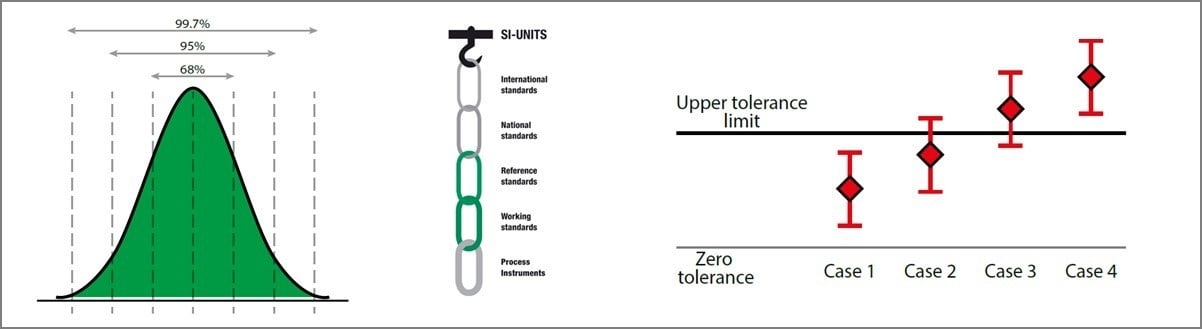

Traceability and calibration uncertainty

Finally, a couple of vital things you should remember with any calibration are traceability and uncertainty.

In short, traceability means that all your calibrations (measurement instruments) must be validly traceable to relevant national standards.

Whenever you make a measurement, you should be aware of the uncertainty related to that measurement.

If the traceability and uncertainty are not considered, the measurement does not have much value.

For more detailed information on traceability and uncertainty, please take a look at the below-mentioned blog posts:

More information on metrological traceability in calibration:

Metrological Traceability in Calibration – Are you traceable?

More information on calibration uncertainty:

Calibration uncertainty for dummies - Part 1

Also, please check out the article What is calibration on our web site.

