This article discusses a few critical items to address in order to base a calibration program on sound metrology fundamentals without completely overhauling it. Properly calibrated process control instrumentation provides high-quality process control, keeps the process operating to design specifications, and prevents the process from being stressed as it compensates for inaccurate measurement data feeding the DCS. These benefits may be challenging to quantify and to attribute to any one of the suggested changes, but the changes themselves should not be extraordinarily burdensome on resources.

Introduction

Metrology appears calm on the surface but runs deep with highly technical concepts. It is the science of measurement and incorporates aspects of many diverse fields such as mathematics, statistics, physics, quality, chemistry and computer science, all applied with a little common sense. Because metrology work is interspersed with other job duties, many people rely on metrology knowledge, but only a small percentage understand the science intimately. The maintenance stakeholders in a power plant typically have diverse educational backgrounds, and most, if not all, of their metrology knowledge is learned on the job.

Calibration programs are often based on the minimal definition of calibration: comparing an instrument’s measurement to a known standard and documenting the results. With the lean staffing levels typical of, for example, power plant maintenance groups today, it’s natural for these programs to evolve out of expediency. This expediency, and the lack of defined metrologist roles on these

The typical Electrical & Instrumentation (E&I) Manager has responsibility

At a minimum, a competent calibration program

When instruments are calibrated with a traceable standard and the results are documented, many consider this adequate and see no need for change. This position is bolstered by the very nature of how maintenance is scheduled. Months of planning go into an outage, and when the time to execute arrives, with tight resources and tight schedules, the work must be accomplished as expeditiously as possible. All unnecessary steps must be eliminated so as not to jeopardize the outage schedule. Adding steps to the process therefore seems counter-intuitive, even when those steps are necessary to improve it. E&I leadership must have the foresight to implement strategic change in order to realize the benefits of improvement.

Implementing metrology-based principles does not have to be a dramatic change. A substantial positive impact

- Measurement tolerance and pass/fail determination

- Test strategy including hysteresis

- Maintaining acceptable Test Uncertainty Ratios

- Securing information assets

Measurement tolerance and pass/fail determination

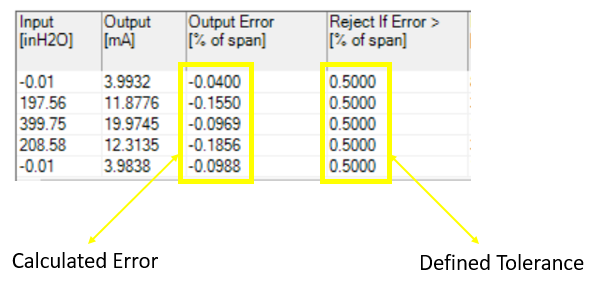

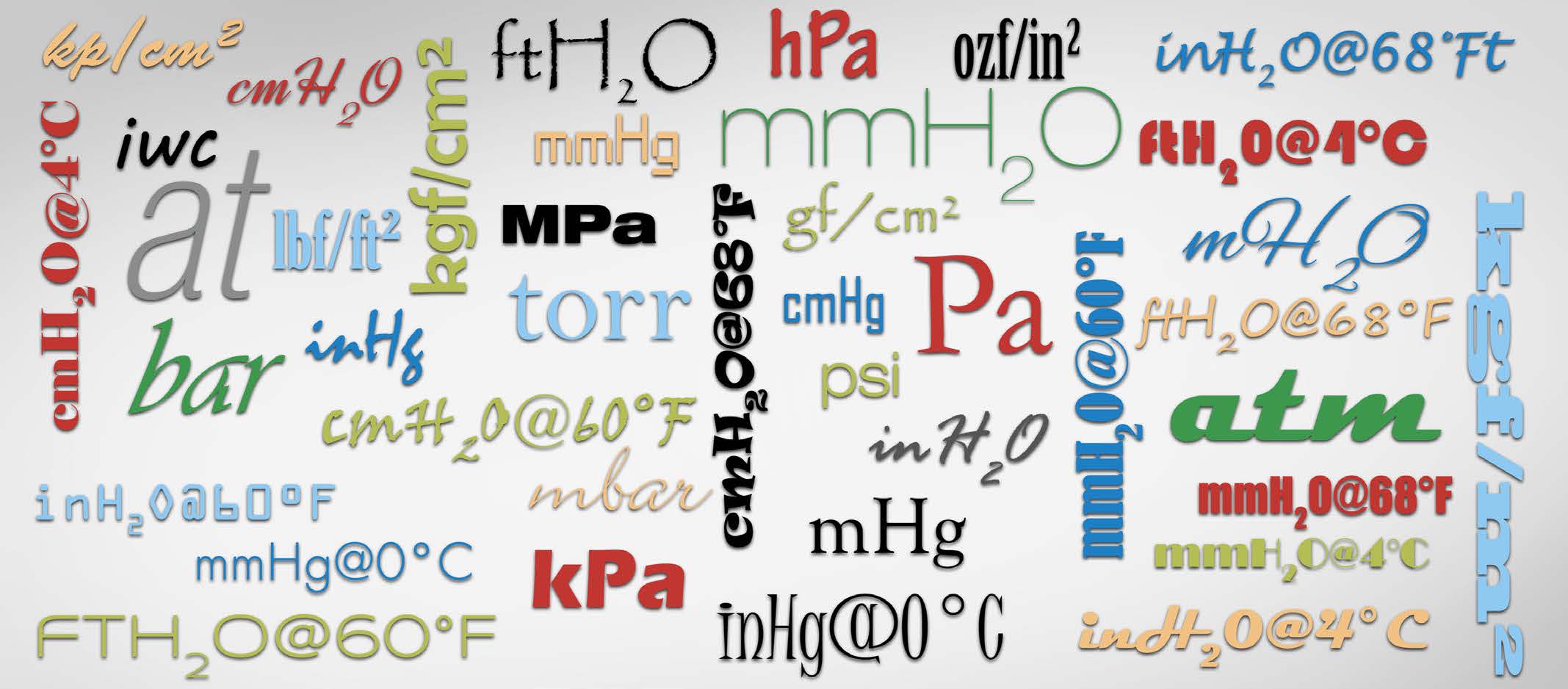

The calibration tolerance assigned to each instrument is the defining value used to determine how much measurement error is acceptable. It should rely heavily on the requirements of the process, not on what the instrument is capable of performing. Ideally, the tolerance is a parameter set during process development, where the effect of variation is measured. Unfortunately, there is no hard-and-fast formula for developing a tolerance value. It should be based on some combination of the process requirement, the manufacturer’s stated accuracy of the instrument, the criticality of the instrument and its intended use. Care should be taken not to set the tolerance too tight, as that forces the measurement to be more accurate than the process actually needs.
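As an illustration of a pass/fail determination, the following is a minimal sketch that assumes the tolerance is expressed as a percentage of the instrument span. The function names, the 0 to 100 psi range and the 0.5% tolerance are hypothetical values chosen for the example, not figures from any particular plant or instrument. The sketch deliberately ignores the uncertainty of the calibration standard.

```python
def error_percent_of_span(applied, reading, range_low, range_high):
    """Return the error at one test point as a percentage of span.

    applied: value generated by the reference standard
    reading: value indicated by the instrument under test
    """
    span = range_high - range_low
    if span <= 0:
        raise ValueError("range_high must be greater than range_low")
    return (reading - applied) / span * 100.0


def within_tolerance(applied, reading, range_low, range_high, tolerance_pct):
    """True when the absolute error does not exceed the tolerance (% of span)."""
    error = error_percent_of_span(applied, reading, range_low, range_high)
    return abs(error) <= tolerance_pct


if __name__ == "__main__":
    # Hypothetical 0-100 psi transmitter with a 0.5% of span tolerance.
    for reading in (50.3, 50.6):
        ok = within_tolerance(50.0, reading, 0.0, 100.0, 0.5)
        print(f"applied 50.0, read {reading}: {'PASS' if ok else 'FAIL'}")
```

With these assumed numbers, a reading of 50.3 psi at an applied 50.0 psi is a 0.3% of span error and passes, while 50.6 psi is a 0.6% error and fails.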

Tolerance can be stated as a unit of measure, percentage of

Test strategy including hysteresis

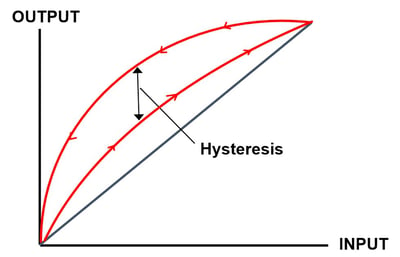

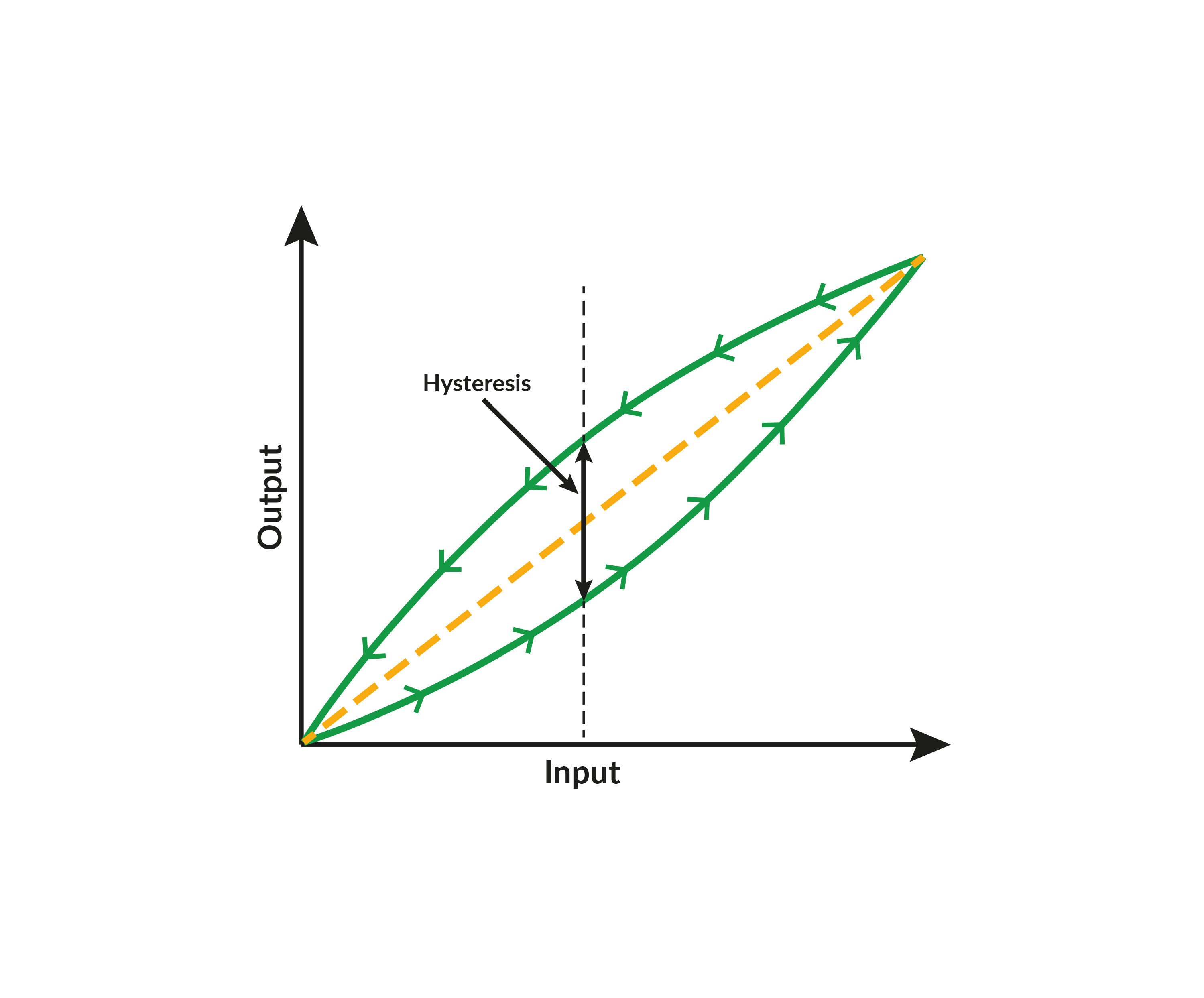

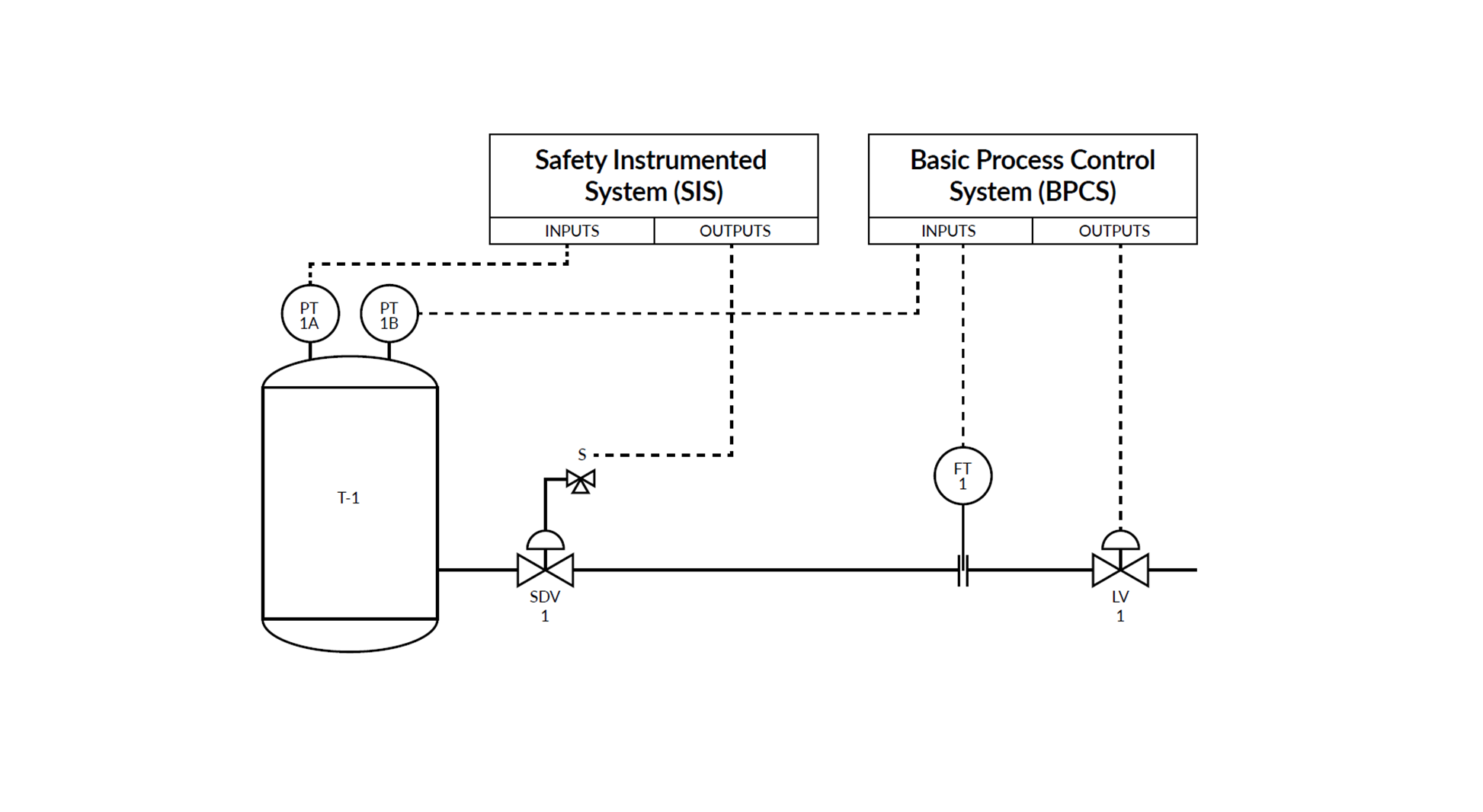

Hysteresis errors occur when the instrument responds differently to an increasing input than to a decreasing input, and they are almost always caused by mechanical friction on some moving element (see Figure 1). These errors can rarely be rectified by calibration adjustments alone and typically require replacing the instrument or correcting the mechanical element that is causing the friction. This is a critical error because it likely indicates that the instrument is failing.
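The definition above can be sketched in a few lines of code. This is a minimal example under stated assumptions: readings are recorded at the same test points while the input is rising and while it is falling, and hysteresis is reported as the largest disagreement between the two, as a percentage of span. The function name and the sample readings are hypothetical.

```python
def hysteresis_percent_of_span(up_readings, down_readings, range_low, range_high):
    """Largest up/down disagreement at matching test points, in % of span.

    up_readings and down_readings map each applied test point to the
    instrument reading taken while the input was rising or falling.
    Only test points present in both directions are compared.
    """
    span = range_high - range_low
    if span <= 0:
        raise ValueError("range_high must be greater than range_low")
    common_points = set(up_readings) & set(down_readings)
    if not common_points:
        raise ValueError("no test point was measured in both directions")
    worst = max(abs(up_readings[p] - down_readings[p]) for p in common_points)
    return worst / span * 100.0


if __name__ == "__main__":
    # Hypothetical 0-100 psi instrument tested at 0, 50, 100, then 50, 0.
    up = {0.0: 0.0, 50.0: 49.8, 100.0: 100.0}
    down = {50.0: 50.4, 0.0: 0.1}
    print(f"hysteresis: {hysteresis_percent_of_span(up, down, 0.0, 100.0):.2f}% of span")
```

In this assumed data set the mid-range readings differ by 0.6 psi between the two directions, so the instrument could pass each individual point against a loose tolerance and still show a hysteresis of about 0.6% of span.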

Most calibration test strategies include a test point at zero (0%) and a test point at span (100%), and typically at least one more test point at mid-range (50%). This 3-point test can be considered a good balance between efficiency and practicality during an outage. However, the only way to detect hysteresis is to use a testing strategy that includes test points going up the span and test points coming back down the span. Critical

Maintaining acceptable Test Uncertainty Ratios

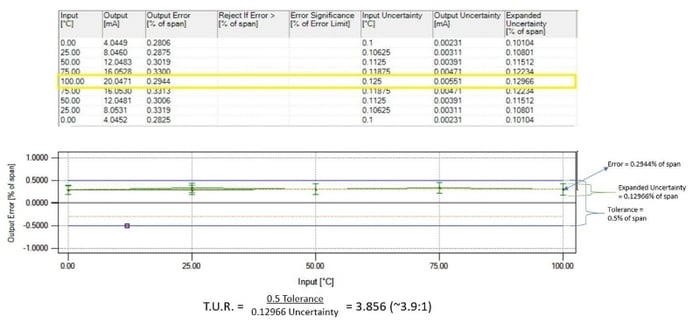

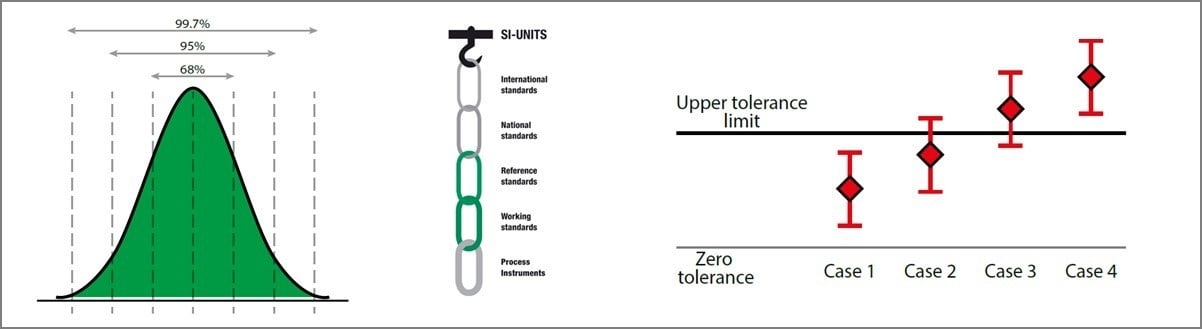

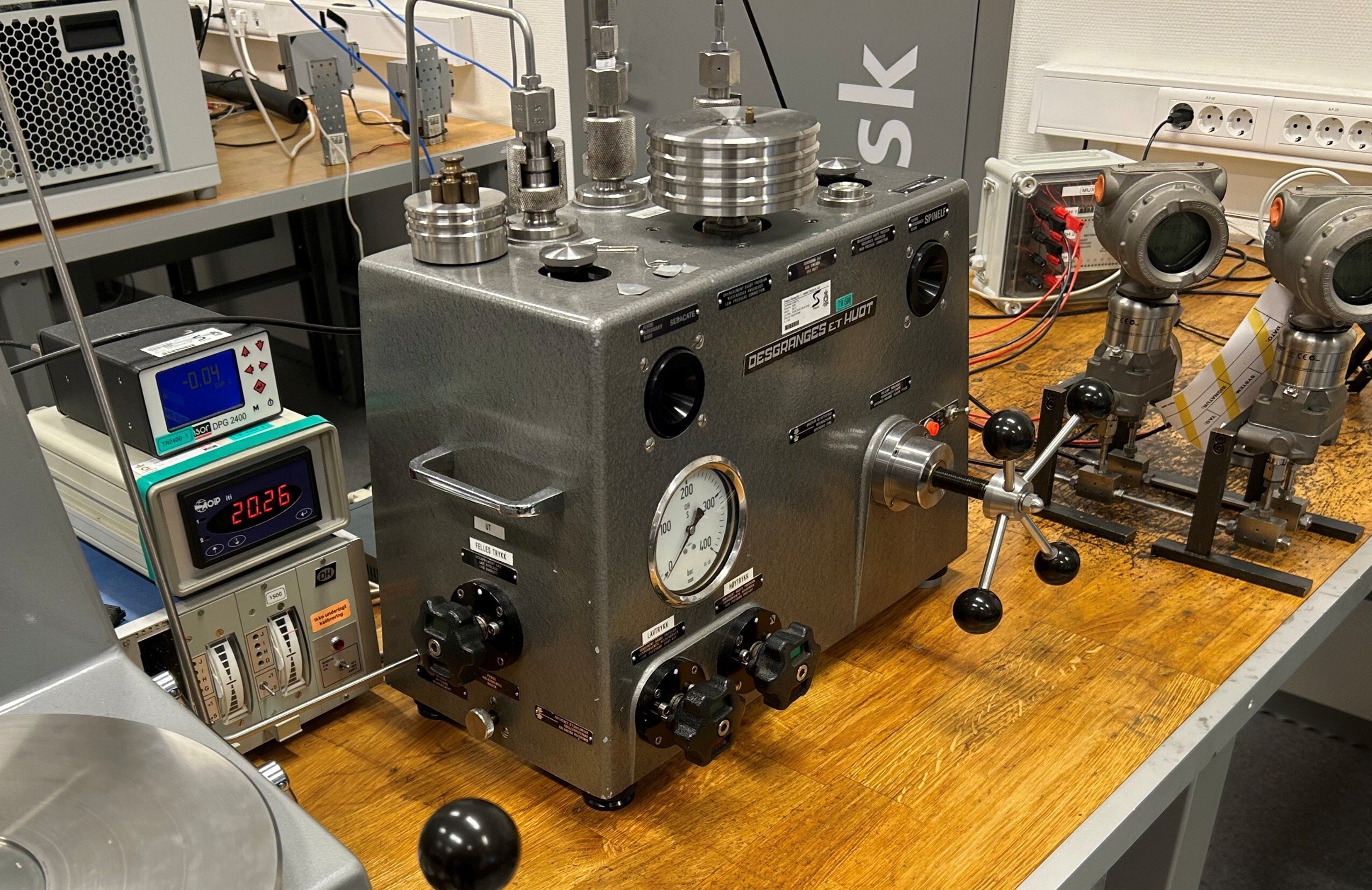

Measurement uncertainty is an estimate of the error associated with a measurement. In general, the smaller the uncertainty, the higher the accuracy of the measurement. The uncertainty of the measurement standard (i.e., the calibrator) is the primary factor, considered along with potential errors introduced in the calibration process, in estimating the calibration uncertainty, which is typically stated at a 95% confidence level (k=2). The ratio of the tolerance of the instrument under test to the estimated calibration uncertainty is known as the Test Uncertainty Ratio (TUR).

An instrument measurement can be confidently declared within tolerance only if the uncertainty of the standard used to calibrate the instrument is known. Once the tolerance is defined, a good rule of thumb is that the uncertainty of the measurement standard should not exceed 25% of the acceptable tolerance. This 25% equates to a TUR of 4:1; the standard used is four times more accurate than the instrument being checked. With today's technology, a TUR of 4:1 is becoming more difficult to achieve, so accepting the risk of a lower TUR of 3:1 or 2:1 may have to be considered.
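The 4:1 rule of thumb is simple arithmetic, sketched below. The sketch assumes the tolerance and the calibration uncertainty are both expressed in the same units and as symmetric plus/minus values, with the uncertainty already expanded to k=2. The function names and the example numbers are hypothetical.

```python
def tur(tolerance, expanded_uncertainty):
    """Test Uncertainty Ratio: instrument tolerance over calibration uncertainty.

    Both arguments are +/- half-widths in the same unit; the uncertainty
    is assumed to be the expanded (k=2) value.
    """
    if expanded_uncertainty <= 0:
        raise ValueError("expanded_uncertainty must be positive")
    return tolerance / expanded_uncertainty


def meets_ratio(tolerance, expanded_uncertainty, required=4.0):
    """True when the TUR is at least the required ratio (4:1 by default)."""
    return tur(tolerance, expanded_uncertainty) >= required


if __name__ == "__main__":
    # Hypothetical instrument tolerance of +/-0.5 psi.
    for uncertainty in (0.1, 0.2):
        ratio = tur(0.5, uncertainty)
        verdict = "meets" if meets_ratio(0.5, uncertainty) else "falls short of"
        print(f"uncertainty +/-{uncertainty} psi: TUR {ratio:.1f}:1 {verdict} 4:1")
```

With these assumed figures, a calibrator uncertainty of 0.1 psi gives a TUR of 5:1, while 0.2 psi gives 2.5:1, which would pass only if a lower ratio such as 2:1 has been accepted.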

Another challenge in many plants

Securing information assets

Information is one of the company’s most valuable assets, but less so if

Harnessing this type of information should be a top priority as the metrology data clearly provides

Conclusion

Calibration and metrology carry inherent complexities. Metrology is a specialized and highly technical subject, and metrology subject matter experts make up a fraction of the overall population of maintenance personnel in the power generation industry. Whereas the desire to maximize electrical output requires maximum availability, reliability

Transforming the calibration program doesn’t have to be a resource intensive,

The specific subject areas highlighted in this paper were selected because they are often overlooked, based on Beamex experience working with various power plants. Corrective action taken
